Robots.txt directives. Robots.txt for Yandex

The first thing a search bot does when it comes to your site is look for and read the robots.txt file. What is this file? It is a set of instructions for a search engine.

It is a plain text file with a .txt extension, located in the root directory of the site. Its instructions tell the search robot which pages and files on the site to index and which not to. It can also indicate the main mirror of the site and where to find the sitemap.

What is the robots.txt file for? For proper indexing of your site: it keeps duplicate pages and various service pages and documents out of search results. Once you configure the directives in robots.txt correctly, you will spare your site many problems with indexing and site mirroring.

How to create the correct robots.txt

Creating robots.txt is quite easy: create a text document in the standard Windows Notepad and write the directives for search engines in it. Then save the file under the name “robots” with the extension “txt”. Now it can be uploaded to the hosting, into the root folder of the site. Note that a site can have only one robots file. If the site has no such file, the bot automatically “decides” that everything may be indexed.

Since there is only one file, it contains instructions for all search engines. You can write separate instructions for each search engine as well as a common set for all of them at once. Instructions are addressed to different search bots with the User-agent directive; more on this below.

Robots.txt directives

The robots.txt file can contain the following directives for managing indexing: User-agent, Disallow, Allow, Sitemap, Host, Crawl-delay, Clean-param. Let's look at each of them in more detail.

User-agent directive

User-agent directive: indicates which search engine (more precisely, which specific bot) the instructions are for. An asterisk “*” means the instructions are intended for all robots. If a specific bot such as Googlebot is named, the instructions apply only to Google's main indexing robot. If there are separate instructions for Googlebot and for all robots (*), Googlebot reads only its own instructions and ignores the general ones. The Yandex bot behaves the same way. Here are some examples:

User-agent: YandexBot - instructions only for the main Yandex indexing bot

User-agent: Yandex - instructions for all Yandex bots

User-agent: * - instructions for all bots
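To see how a robot picks its group, here is a minimal sketch using Python's standard urllib.robotparser module. The robots.txt content and the robot name Bingbot are made up for illustration. Note that robotparser matches a User-agent line by substring (so “Yandex” also covers YandexBot), which is a simplification of how real search engines choose the most specific group.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: a Googlebot group, a Yandex group and a catch-all group.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /private/

User-agent: Yandex
Disallow: /tmp/

User-agent: *
Disallow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Googlebot uses only its own group, so "Disallow: /" from "*" does not apply to it.
print(parser.can_fetch("Googlebot", "/page.html"))       # True
print(parser.can_fetch("Googlebot", "/private/a.html"))  # False
# The "Yandex" group also covers YandexBot (robotparser matches by substring).
print(parser.can_fetch("YandexBot", "/tmp/a.html"))      # False
print(parser.can_fetch("YandexBot", "/page.html"))       # True
# A robot without its own group falls back to "*", which blocks everything.
print(parser.can_fetch("Bingbot", "/page.html"))         # False
```

If you removed the Googlebot group, Googlebot would fall back to the “*” group and be blocked from the whole site.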

Disallow and Allow directives

Disallow and Allow directives: they tell the robot what to index and what not to. Disallow forbids indexing a page or an entire section of the site; Allow, conversely, indicates what may be indexed.

Disallow: / - prohibits indexing the entire site

Disallow: /papka/ - prohibits indexing the entire contents of the folder

Disallow: /files.php - prohibits indexing the files.php file

Allow: /cgi-bin – allows cgi-bin pages to be indexed

Special characters can be used in the Disallow and Allow directives, and they are often necessary. They act as simple wildcard patterns rather than full regular expressions.

The special character * matches any sequence of characters. Every rule implicitly ends with it: even if you do not write it, the search engine adds it. Examples:

Disallow: /cgi-bin/*.aspx - prohibits indexing of all files with the .aspx extension inside /cgi-bin/

Disallow: /*foto - prohibits indexing of files and folders containing the word foto

The special character $ cancels the implicit “*” at the end of a rule. For example:

Disallow: /example$ - prohibits indexing '/example', but does not prohibit '/example.html'

And if you write it without the special symbol $, then the instruction will work differently:

Disallow: /example - disables both '/example' and '/example.html'
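The * and $ rules can be modelled in a few lines of Python. rule_matches below is a hypothetical helper written for illustration, not any search engine's actual code: it turns a Disallow/Allow pattern into a regular expression, treating * as “any characters”, a trailing $ as “end of path”, and otherwise requiring only a prefix match (the implicit trailing *).

```python
import re


def rule_matches(pattern: str, path: str) -> bool:
    """Return True if a Disallow/Allow pattern matches a URL path.

    '*' matches any sequence of characters (including none), a trailing '$'
    anchors the pattern to the end of the path, and without '$' the pattern
    only has to match the beginning of the path (an implicit trailing '*').
    """
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Escape every literal piece and put '.*' where each '*' stood.
    regex = ".*".join(re.escape(piece) for piece in pattern.split("*"))
    if anchored:
        return re.fullmatch(regex, path) is not None
    return re.match(regex, path) is not None


print(rule_matches("/example$", "/example"))                      # True
print(rule_matches("/example$", "/example.html"))                 # False
print(rule_matches("/example", "/example.html"))                  # True
print(rule_matches("/*foto", "/gallery/foto1.jpg"))               # True
print(rule_matches("/cgi-bin/*.aspx", "/cgi-bin/app/page.aspx"))  # True
```

The outputs reproduce the /example and /example$ cases described above.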

Sitemap Directive

Sitemap directive: tells the search robot where the sitemap is located on the site. The sitemap must be in the Sitemaps XML format. A sitemap helps the site get indexed faster and more completely. A site can have more than one sitemap file. Example:

Sitemap: http://site/sitemaps1.xml

Sitemap: http://site/sitemaps2.xml
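To check which sitemaps a robots.txt file declares, Python's urllib.robotparser (version 3.8 and later) collects them with site_maps(). A short sketch using the two lines above; the User-agent and Disallow lines are added only to make the file complete:

```python
from urllib.robotparser import RobotFileParser

parser = RobotFileParser()
parser.parse([
    "User-agent: *",
    "Disallow:",
    # Sitemap lines do not belong to any User-agent group.
    "Sitemap: http://site/sitemaps1.xml",
    "Sitemap: http://site/sitemaps2.xml",
])
print(parser.site_maps())  # ['http://site/sitemaps1.xml', 'http://site/sitemaps2.xml']
```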

Host directive

Host directive: indicates the main mirror of the site to the robot. If your site has mirrors, you should specify this directive. Otherwise the Yandex robot may index at least two versions of the site, with and without www, until the mirror robot merges them. Note that in 2018 Yandex announced it would stop taking Host into account and would determine the main mirror from 301 redirects instead. Example entries:

Host: www.site

Host: website

In the first case, the robot will index the version with www, in the second case, without. You are allowed to specify only one Host directive in the robots.txt file. If you enter several of them, the bot will process and take into account only the first one.

A valid Host directive must contain:

- the protocol, if the site uses HTTPS (for example, Host: https://www.site.ru);

- a correctly written domain name (an IP address is not allowed);

- the port number, if necessary (for example, Host: site.com:8080).

Directives made incorrectly will simply be ignored.
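The rules for a valid Host value can be expressed as a small check. parse_host below is a hypothetical helper that encodes the list above as we read it: no http:// prefix, https:// only for HTTPS sites, a domain name rather than an IP address, an optional port, and nothing after the domain (not even a trailing slash). It is not Yandex's own validator, only an illustration.

```python
import ipaddress
from typing import Optional, Tuple
from urllib.parse import urlsplit


def parse_host(value: str) -> Optional[Tuple[str, str, Optional[int]]]:
    """Return (protocol, domain, port) for a Host value, or None if it looks invalid."""
    value = value.strip()
    # urlsplit only recognises the host part after "//".
    parts = urlsplit(value if "://" in value else "//" + value)
    if parts.scheme not in ("", "https"):
        return None  # only an https:// prefix is accepted
    if parts.path or parts.query or parts.fragment:
        return None  # nothing may follow the domain
    host = parts.hostname
    if not host:
        return None
    try:
        ipaddress.ip_address(host)
        return None  # IP addresses are not accepted
    except ValueError:
        pass  # not an IP address, so treat it as a domain name
    try:
        port = parts.port  # raises ValueError for a non-numeric or out-of-range port
    except ValueError:
        return None
    return (parts.scheme or "http", host, port)


print(parse_host("www.site.ru"))          # ('http', 'www.site.ru', None)
print(parse_host("https://www.site.ru"))  # ('https', 'www.site.ru', None)
print(parse_host("site.com:8080"))        # ('http', 'site.com', 8080)
print(parse_host("192.168.0.1"))          # None
```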

Crawl-delay directive

The Crawl-delay directive helps reduce the load on the server. It is useful when your site starts to struggle under requests from many bots. Crawl-delay tells the search bot how long to wait between finishing the download of one page and starting the download of the next. The directive must come immediately after the “Disallow” and/or “Allow” entries. The Yandex search robot accepts fractional values, for example 1.5 (one and a half seconds).
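On the crawler's side, Crawl-delay is simply a pause between downloads. A minimal sketch: parse_crawl_delay and polite_crawl are hypothetical names, fetch stands for whatever function downloads a page, and sleep can be swapped out so the pauses can be observed.

```python
import time
from typing import Callable, Iterable, Optional


def parse_crawl_delay(value: str) -> Optional[float]:
    """Parse a Crawl-delay value in seconds; fractions such as "1.5" are accepted."""
    try:
        delay = float(value.strip())
    except ValueError:
        return None
    # Reject negative, NaN and infinite values.
    if not 0 <= delay < float("inf"):
        return None
    return delay


def polite_crawl(urls: Iterable[str], fetch: Callable[[str], None], delay: float,
                 sleep: Callable[[float], None] = time.sleep) -> None:
    """Fetch pages one by one, pausing `delay` seconds after each download
    finishes and before the next one starts."""
    for index, url in enumerate(urls):
        if index > 0:
            sleep(delay)
        fetch(url)


# Record pauses instead of really sleeping.
fetched, pauses = [], []
polite_crawl(["/a", "/b", "/c"], fetched.append, parse_crawl_delay("1.5"), sleep=pauses.append)
print(fetched)  # ['/a', '/b', '/c']
print(pauses)   # [1.5, 1.5]
```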

Clean-param directive

The Clean-param directive is needed by sites whose page addresses contain dynamic parameters that do not affect the page content: session identifiers, user identifiers, referrers and similar service information. The directive prevents such pages from turning into duplicates: it tells the search engine not to re-download the same content under different addresses. This also reduces the load on the server and the time the robot needs to crawl the site.

Clean-param: s /forum/showthread.php

This entry tells the search engine that the s parameter is insignificant for all URLs that start with /forum/showthread.php. The maximum length of a Clean-param entry is 500 characters.
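The effect of Clean-param can be sketched as URL normalisation: drop the listed parameters when the path starts with the given prefix, so that addresses differing only in those parameters collapse into one. apply_clean_param is a hypothetical helper; it ignores the wildcards Yandex allows in the path prefix and does not model which of the duplicate addresses the robot actually keeps.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def apply_clean_param(url: str, directive: str) -> str:
    """Drop the parameters listed in a Clean-param value from `url`.

    `directive` is the text after "Clean-param:", e.g. "s /forum/showthread.php"
    or "ref&sid /some_dir/". Without a path prefix the rule covers every URL.
    """
    fields = directive.split()
    ignored = set(fields[0].split("&"))
    prefix = fields[1] if len(fields) > 1 else "/"
    parts = urlsplit(url)
    if not parts.path.startswith(prefix):
        return url  # the rule does not cover this path
    kept = [(name, value)
            for name, value in parse_qsl(parts.query, keep_blank_values=True)
            if name not in ignored]
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), parts.fragment))


a = apply_clean_param("http://www.site1.com/forum/showthread.php?s=681498b9648949605&t=8243",
                      "s /forum/showthread.php")
b = apply_clean_param("http://www.site1.com/forum/showthread.php?s=1e71c4427317a117a&t=8243",
                      "s /forum/showthread.php")
print(a)       # http://www.site1.com/forum/showthread.php?t=8243
print(a == b)  # True: both addresses collapse into one
```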

We've sorted out the directives, let's move on to setting up our robots file.

Setting up robots.txt

Now let's set up the robots.txt file itself. It must contain at least two entries:

User-agent: indicates which search engine the instructions below are for.

Disallow: specifies which part of the site should not be indexed. It can block a single page as well as entire sections of the site.

These directives can be addressed to all search engines or to one in particular; this is set in the User-agent directive. If you want all bots to read the instructions, put an asterisk:

User-agent: *

If you want to write instructions for a specific robot, specify its name:

User-agent: YandexBot

A simplified example of a correctly composed robots file:

User-agent: *
Disallow: /files.php
Disallow: /section/
Host: website

Here:

User-agent: * indicates that the instructions are intended for all search engines;

Disallow: /files.php prohibits indexing of the file files.php;

Disallow: /section/ prohibits indexing of the entire “section” section with all its files;

Host: website tells robots which mirror to index.

If you don’t have pages on your site that need to be closed from indexing, then your robots.txt file should be like this:

User-agent: *
Disallow:
Host: website
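If you generate robots.txt from a script rather than by hand, the main thing is to keep each group's lines together. build_robots_txt is a hypothetical helper: it writes one block per User-agent, adds an empty “Disallow:” to a group with no rules (which allows everything), and puts Sitemap lines in a block of their own.

```python
from typing import Dict, List, Optional


def build_robots_txt(groups: Dict[str, List[str]], sitemaps: Optional[List[str]] = None) -> str:
    """Render robots.txt text from {user_agent: [rule lines]}."""
    blocks = []
    for agent, rules in groups.items():
        # A group without rules gets an empty "Disallow:", i.e. everything is allowed.
        lines = [f"User-agent: {agent}"] + (rules or ["Disallow:"])
        # Lines of one group stay together: a blank line would end the group.
        blocks.append("\n".join(lines))
    if sitemaps:
        # Sitemap lines do not belong to any User-agent group.
        blocks.append("\n".join(f"Sitemap: {url}" for url in sitemaps))
    # Blocks are separated by one blank line; the file ends with a newline.
    return "\n\n".join(blocks) + "\n"


print(build_robots_txt({"*": ["Disallow: /files.php", "Disallow: /section/", "Host: website"]}))
```

For example, build_robots_txt({"*": []}) produces the “allow everything” file: a User-agent: * line followed by an empty Disallow: line.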

Robots.txt for Yandex

To address instructions to the Yandex search engine, specify User-agent: Yandex. The name “Yandex” makes the instructions apply to all Yandex robots, while “YandexBot” applies them only to the main indexing robot.

You also need to specify the Host directive with the main mirror of the site; as I wrote above, this prevents duplicate pages. A correct robots.txt for Yandex therefore includes a User-agent: Yandex group with the Host directive.

Every mechanism needs instructions to work, and search engines are no exception, which is why a special file called robots.txt was invented. It should be located in the root folder of your site, or it can be generated virtually, but it must open at www.yoursite.ru/robots.txt

Search engines have long learned to tell the HTML pages that matter apart from the internal scripts of your CMS; more precisely, they have learned to recognize links to content articles and all sorts of rubbish. That is why many webmasters no longer bother creating a robots file and assume everything will be fine anyway. They are 99% right: if your site has no such file, search engines crawl its content without limits. But there are nuances, and the related errors can be prevented in advance.

If you have any problems with this file on your site, write in the comments to this article and I will quickly help you, completely free. Webmasters very often make small mistakes in this file that lead to poor indexing of the site or even to its exclusion from the index.

What is robots.txt for?

The robots.txt file is created to configure correct indexing of the site by search engines: it contains rules that allow or forbid certain paths or types of content on your site. But it is not a panacea. The rules in a robots file are not orders that must be followed exactly, only recommendations for search engines. Google, for example, writes:

You cannot use the robots.txt file to hide a page from Google Search results. Other pages can link to it, and it may still be indexed.

Search robots decide for themselves what to index and how to behave on a site. Each search engine has its own tasks and functions, and however much we might want to, robots.txt is not a way to tame them.

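But there is one trick that is not directly related to the topic of this article. To completely prevent robots from indexing a page and showing it in search results, add the robots meta tag to the page's head section: <meta name="robots" content="noindex">. The page must not be blocked in robots.txt, otherwise the robot cannot read the tag.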

Let's return to robots. The rules in this file can block or allow access to the following types of files:

- Non-graphic files. These are mainly HTML files that contain some information. You can close duplicate pages or pages that carry no useful information (pagination pages, calendar pages, archive pages, profile pages, etc.).

- Graphic files. If you want site images not to be displayed in searches, you can set this in the robots.

- Resource files. Also, using robots, you can block the indexing of various scripts, CSS style files and other unimportant resources. But you should not block resources that are responsible for the visual part of the site for visitors (for example, if you close the css and js of the site that display beautiful blocks or tables, the search robot will not see this and will complain about it).

To clearly show how robots works, look at the picture below:

A search robot visiting a site first reads the indexing rules, then indexes the site according to the file's recommendations. Depending on the rules, the search engine knows what can be indexed and what cannot.


Robots.txt syntax

To write rules for search engines, the robots file uses directives with various parameters, which the robots follow. Let's start with the first and probably the most important directive:

User-agent directive

User-agent: with this directive you specify the name of the robot that should follow the recommendations in the file. By one count, there are 302 such robots in the world. You can write rules for each of them separately, but if you don't have time for that, just write:

User-agent: *

Here * means “all”. That is, your robots.txt file should begin by stating who exactly the rules are intended for. To avoid listing all the robot names, just write an asterisk in the User-agent directive.

Here are lists of the robots of popular search engines:

Google: Googlebot - main robot

Other Google robots:

Googlebot-News - news search robot

Googlebot-Image - image robot

Googlebot-Video - video robot

Googlebot-Mobile - mobile version robot

AdsBot-Google - landing page quality checking robot

Mediapartners-Google - AdSense service robot

Yandex: YandexBot - main indexing robot (Yandex also has a number of other robots)

Disallow and Allow directives

Disallow: the most basic rule in robots. With this directive you prohibit indexing of certain places on your site. It is written like this:

Disallow:

You will very often see an empty Disallow directive, which tells the robot that nothing on the site is prohibited and it may index whatever it wants. Be careful: if you put / in Disallow, you will completely close the site from indexing.

Therefore, the most standard version of robots.txt, which “allows indexing of the entire site for all search engines,” looks like this:

User-Agent: *
Disallow:

If you don't know what to write in robots.txt, but have heard about it somewhere, just copy the code above, save it in a file called robots.txt and upload it to the root of your site. Or don’t create anything, because even without it, robots will index everything on your site. Or read the article to the end, and you will understand what to close on the site and what not.

Under the robots rules, each User-agent group must contain at least one Disallow directive.

This directive can prohibit both a folder and an individual file.

If you want to block a folder, write:

Disallow: /folder/

If you want to block a specific file:

Disallow: /images/img.jpg

If you want to block certain file types:

Disallow: /*.png$

Wildcard patterns like this are not supported by every search engine; Google and Yandex support them.

Allow: the permitting directive in robots.txt. It allows the robot to index a specific path or file inside a prohibited directory. It was not part of the original standard, but both Yandex and Google support it. For example:

Allow: /content
Disallow: /

These directives prevent all site content from being indexed except the content folder. Here is another combination that has become popular lately:

Allow: /template/*.js
Allow: /template/*.css
Disallow: /template

These rules allow the CSS and JS files in the template folder to be indexed while blocking everything else in that folder. Over the past year, Google has sent many webmasters letters with the following content:

Googlebot can't access CSS and JS files on the site

And the accompanying comment: “We've discovered an issue with your site that may be preventing it from being crawled. Googlebot cannot process JavaScript code and/or CSS files due to restrictions in the robots.txt file. This data is needed to evaluate how the site works. Therefore, if access to resources is blocked, this may worsen your site's position in Search.”

If you add the two allow directives that are written in the last code to your Robots.txt, then you will not see similar messages from Google.

Special characters in robots.txt

Now about the signs used in the directives. The basic special characters in prohibiting or permitting rules are /, * and $.

The forward slash “/”

The slash is very deceptive in robots.txt. Several dozen times I have seen the following added to robots.txt out of ignorance:

User-Agent: *
Disallow: /

This happens because people read about site structure somewhere and copied it to their own site. But this prohibits indexing of the entire site. To prohibit indexing of a particular directory with all its contents, be sure to put / at the end. If, for example, you write Disallow: /seo, then absolutely all links on your site whose path begins with /seo will not be indexed: the folder /seo/, the category /seo-tool/ and the article /seo-best-of-the-best-soft.html alike.

Check every / in your robots.txt carefully.

Always put / at the end of directory names. Disallow: / blocks the entire site, while Allow: / permits it. In a sense, / means “everything that follows the /”.
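The trailing-slash difference is easy to check with Python's urllib.robotparser, which, like the rules described here, treats a Disallow path as a prefix. The paths are the examples from this section; blocked_paths is a hypothetical helper and AnyBot an arbitrary robot name.

```python
from urllib.robotparser import RobotFileParser


def blocked_paths(rule, paths):
    """Return the paths that a single rule in a "User-agent: *" group blocks."""
    parser = RobotFileParser()
    parser.parse(["User-agent: *", rule])
    return [path for path in paths if not parser.can_fetch("AnyBot", path)]


paths = ["/seo", "/seo/", "/seo/page.html", "/seo-tool/",
         "/seo-best-of-the-best-soft.html", "/blog/seo/"]

print(blocked_paths("Disallow: /seo", paths))
# ['/seo', '/seo/', '/seo/page.html', '/seo-tool/', '/seo-best-of-the-best-soft.html']
print(blocked_paths("Disallow: /seo/", paths))
# ['/seo/', '/seo/page.html']
```

Note that Disallow: /seo/ does not block the bare address /seo, because /seo does not start with /seo/.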

About asterisks * in robots.txt

The special character * means any sequence of characters, including an empty one. You can use it anywhere in a rule, like this:

User-agent: *
Disallow: /papka/*.aspx
Disallow: /*old

This prohibits all files with the .aspx extension in the papka directory, and also every path containing “old”: not only the /old folder but also /papka/old. Tricky? That's why I don't recommend playing around with the * symbol in your robots file.

By default, every rule in robots.txt ends with an implicit *!

About the special character $

The $ special character in robots cancels the implicit * at the end of a rule. For example:

Disallow: /menu$

This rule prohibits '/menu' but not '/menu.html'; that is, it blocks search engines only from the exact path /menu and does not block every URL that begins with /menu.

The host directive

The Host rule works only in Yandex, so it is optional. It specifies the main domain among your site's mirrors, if there are any. For example, you have the domain dom.com, but the domains dom2.com, dom3.com and dom4.com have also been purchased and configured, and they redirect to the main domain dom.com.

To help Yandex quickly determine which of them is the main site (host), write the Host directive in your robots.txt:

Host: dom.com

If your site has no mirrors, you don't have to set this rule. But first check whether your home page also opens at the server's IP address; if it does, you should specify the main mirror. Also, if someone has copied all the information from your site and made an exact copy, a Host entry copied along with your robots.txt will still point to your domain.

There should be only one Host entry, with a port if necessary (Host: site:8080).

Crawl-delay directive

This directive was created to reduce the load on your server. Search engine bots can make hundreds of requests to your site at the same time, and if your server is weak, this may cause glitches. To prevent this, the Crawl-delay rule was introduced: it sets the minimum pause between downloads of pages on your site. A commonly recommended value is 2 seconds. In robots.txt it looks like this:

Crawl-delay: 2

This directive works for Yandex; Google ignores it. In Google, you can set the crawl rate in the webmaster panel, in the Site Settings section (the “gear” icon in the upper right corner).

Clean-param directive

This directive is also only for Yandex. If the page addresses of your site contain dynamic parameters that do not affect their content (for example, session identifiers, user identifiers, referrers, etc.), you can describe them with the Clean-param directive.

Using this information, the Yandex robot will not repeatedly reload duplicate information. This will increase the efficiency of crawling your site and reduce the load on the server.

For example, the site has pages:

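www.site.com/some_dir/get_book.pl?ref=site_1&book_id=123

www.site.com/some_dir/get_book.pl?ref=site_2&book_id=123

www.site.com/some_dir/get_book.pl?ref=site_3&book_id=123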

The ref parameter is used only to track which resource the request came from and does not change the content: all three addresses show the same page with the book book_id=123. If you specify the directive as follows:

User-agent: Yandex
Disallow:
Clean-param: ref /some_dir/get_book.pl

the Yandex robot will reduce all these page addresses to one:

www.site.com/some_dir/get_book.pl?ref=site_1&book_id=123

If a page without parameters is available on the site:

www.site.com/some_dir/get_book.pl?book_id=123

then all of them will be reduced to it once the robot indexes it. Other pages of your site will be crawled more often, since there is no need to refresh pages such as:

www.site.com/some_dir/get_book.pl?ref=site_2&book_id=123

www.site.com/some_dir/get_book.pl?ref=site_3&book_id=123

#for addresses like:
www.site1.com/forum/showthread.php?s=681498b9648949605&t=8243
www.site1.com/forum/showthread.php?s=1e71c4427317a117a&t=8243

#robots.txt will contain:
User-agent: Yandex
Disallow:
Clean-param: s /forum/showthread.php

Sitemap Directive

With this directive you simply specify the location of your sitemap.xml. The robot remembers it, “says thank you” and regularly analyzes the sitemap at the given path. It looks like this:

Sitemap: http://site/sitemap.xml

Now let's look at the general questions that arise when composing robots.txt. There are many such topics on the Internet, so we will analyze the most relevant and common ones.

Correct robots.txt

“Correct” is a relative word here: what is correct for one site on one CMS will produce errors on another CMS. A “correctly configured” file is individual to each site. In robots.txt you should close from indexing the sections and files that users don't need and that provide no value to search engines. The simplest correct version of robots.txt:

User-Agent: *
Disallow:
Sitemap: http://site/sitemap.xml

User-agent: Yandex
Disallow:
Host: site.com

This file contains the following rules: rules for all search engines (User-Agent: *) under which indexing of the entire site is fully allowed (“Disallow:”, or you can write “Allow: /”), the host of the main mirror for Yandex (Host: site.com), and the location of your sitemap (Sitemap: http://site/sitemap.xml).

Robots.txt for WordPress

Again, there are many questions here: one site may be an online store, another a blog, a third a landing page, a fourth a company business-card site, all on the WordPress CMS, and the robots rules for each will be completely different. Here is my robots.txt for this blog:

User-Agent: *
Allow: /wp-content/uploads/
Allow: /wp-content/*.js$
Allow: /wp-content/*.css$
Allow: /wp-includes/*.js$
Allow: /wp-includes/*.css$
Disallow: /wp-login.php
Disallow: /wp-register.php
Disallow: /xmlrpc.php
Disallow: /template.html
Disallow: /wp-admin
Disallow: /wp-includes
Disallow: /wp-content
Disallow: /category
Disallow: /archive
Disallow: */trackback/
Disallow: */feed/
Disallow: /?feed=
Disallow: /job
Disallow: /?.net/sitemap.xml

There are a lot of settings here, let's look at them together.

Allow in WordPress. The first, permitting rules are for content that users need (the images in the uploads folder) and that robots need (the CSS and JS used to render pages). Google often complains about blocked CSS and JS, so we left them open. I could have allowed all such files at once with “/*.css$”, but the longer Disallow rules for the folders containing these files take precedence over that short rule, so I had to write out the full path to each prohibited folder.

Allow only makes sense for paths inside content prohibited by Disallow. If something is not prohibited, don't write an Allow rule for it in the hope of nudging search engines (“come on, here's a URL, index it faster”). It doesn't work that way.
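Why does “/*.css$” lose to the folder rule? Google and Yandex resolve conflicting Allow and Disallow rules by the length of the matching rule: the longest one wins, and on a tie Allow wins. Below is a simplified sketch of that precedence, applied to the WordPress rules above. Rule length is taken as the raw pattern length, and the helper names rule_matches and is_allowed are hypothetical; this is not either search engine's own code.

```python
import re
from typing import List, Tuple


def rule_matches(pattern: str, path: str) -> bool:
    """'*' = any characters, trailing '$' = end of path, otherwise a prefix match."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    regex = ".*".join(re.escape(piece) for piece in pattern.split("*"))
    match = re.fullmatch(regex, path) if anchored else re.match(regex, path)
    return match is not None


def is_allowed(rules: List[Tuple[str, str]], path: str) -> bool:
    """Decide Allow/Disallow for one User-agent group.

    rules is a list of ("allow" | "disallow", pattern) pairs. The longest
    matching pattern wins; on equal length "allow" wins. No match means allowed.
    """
    best_length = -1
    allowed = True
    for kind, pattern in rules:
        if not pattern:
            continue  # an empty value ("Disallow:") blocks nothing
        if rule_matches(pattern, path):
            length = len(pattern)
            if length > best_length or (length == best_length and kind == "allow"):
                best_length = length
                allowed = kind == "allow"
    return allowed


WORDPRESS = [("allow", "/wp-content/*.css$"), ("disallow", "/wp-content")]
SHORT_ALLOW = [("allow", "/*.css$"), ("disallow", "/wp-content")]
style = "/wp-content/themes/blog/style.css"
print(is_allowed(WORDPRESS, style))    # True: the longer Allow rule wins
print(is_allowed(SHORT_ALLOW, style))  # False: "/wp-content" is longer than "/*.css$"
```

The order of the rules in the list does not affect the result, which matches how these two search engines describe their precedence.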

Disallow in WordPress. A lot needs to be prohibited in WordPress: many plugins, settings and themes, many scripts and various pages that contain no useful information. But I went further and forbade indexing of everything on my blog except the articles themselves (posts) and pages (About the Author, Services). I even closed the blog categories; I will open them once they are optimized for search queries and each has a text description, but for now they are just duplicate post previews that search engines don't need.

Host and Sitemap are standard directives. I should have made a separate Host entry for Yandex, but I didn't bother. With that, we'll finish with robots.txt for WordPress.

How to create robots.txt

It's not as difficult as it seems at first glance. Just take a regular text editor (Notepad) and write the rules for your site there, following the settings from this article. If that is difficult for you, there are online resources that can generate a robots file for your site:

No one can tell you more about your robots.txt than the search engines themselves; after all, it is for them that you create this “forbidden file”.

Now let's go over some minor errors that can occur in robots.txt.

- Empty lines: do not leave an empty line inside a User-agent block.

- If an Allow and a Disallow directive conflict and their prefixes have the same length, Allow takes precedence.

- Only one Host directive is processed per robots.txt file. If several are specified, the robot uses the first one.

- Clean-param is an inter-sectional directive, so it can be specified anywhere in the robots.txt file. If several are specified, the robot takes all of them into account.

- Six Yandex robots do not follow the general rules of robots.txt (YaDirectFetcher, YandexCalendar, YandexDirect, YandexDirectDyn, YandexMobileBot, YandexAccessibilityBot). To restrict them, write a separate User-agent group for each of them.

- The User-agent directive must always be written above the prohibiting directives.

- One directory per line. You cannot list several directories on one line.

- The file name must be exactly robots.txt: not Robots.txt, ROBOTS.txt and so on. Only lowercase letters.

- In the Host directive, write the domain without http:// and without slashes. Incorrect: Host: http://www.site.ru/; correct: Host: www.site.ru.

- If the site uses the secure HTTPS protocol, the Host directive (for the Yandex robot) must specify the protocol: Host: https://www.site.ru.

This article will be updated as interesting questions and nuances become available.

I was with you, lazy Staurus.

Hello, dear readers of the “Webmaster’s World” blog!

The robots.txt file is a very important file that directly affects the quality of your site's indexing, and therefore its search promotion.

That is why you must be able to format robots.txt correctly, so as not to accidentally prohibit important documents of your web project from being included in the index.

How to format the robots.txt file, what syntax should be used, how to allow and deny documents to the index will be discussed in this article.

About the robots.txt file

First, let's find out in more detail what kind of file this is.

The robots file tells search engines which pages and documents on a site may be added to the index and which may not. It is necessary because search engines initially try to index the entire site, and that is not always right. For example, if you build a site on a CMS (WordPress, Joomla, etc.), it will have folders that run the administrative panel. The information in these folders clearly should not be indexed, so the robots.txt file is used to restrict search engines' access to them.

The robots.txt file also contains the address of the site map (it improves indexing by search engines), as well as the main domain of the site (the main mirror).

Mirror: an exact copy of the site. When two sites are identical, one of them is called the main domain and the other its mirror.

Thus, the file has quite a lot of functions, and important ones at that!

Robots.txt file syntax

The robots file contains blocks of rules that tell a particular search engine what can be indexed and what cannot. There can be one block of rules (for all search engines), but there can also be several of them - for some specific search engines separately.

Each such block begins with a “User-Agent” operator, which indicates which search engine these rules apply to.

User-Agent: A
(rules for robot “A”)

User-Agent: B
(rules for robot “B”)

The example above shows that the “User-Agent” operator has a parameter - the name of the search engine robot to which the rules are applied. I will list the main ones below:

After “User-Agent” there are other operators. Here is their description:

All operators have the same syntax, that is, they are used as follows:

Operator1: parameter1

Operator2: parameter2

…

Thus, first we write the name of the operator (no matter in capital or small letters), then we put a colon and, separated by a space, indicate the parameter of this operator. Then, starting on a new line, we describe operator two in the same way.

Important! An empty line means that the block of rules for this search engine is complete, so do not separate the operators within a block with empty lines.
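Here is what that mistake looks like in practice. Python's urllib.robotparser treats a blank line as the end of a block: a blank line between User-agent and its rules drops the rules silently. Crawlers differ in how strictly they apply this, so take the sketch as an illustration of the risk with a strict parser. check_access is a hypothetical helper and the rules are made up.

```python
from urllib.robotparser import RobotFileParser


def check_access(lines, robot, path):
    """Parse robots.txt lines and ask whether `robot` may fetch `path`."""
    parser = RobotFileParser()
    parser.parse(lines)
    return parser.can_fetch(robot, path)


without_blank = ["User-agent: Googlebot", "Disallow: /private/"]
with_blank = ["User-agent: Googlebot", "", "Disallow: /private/"]

print(check_access(without_blank, "Googlebot", "/private/a.html"))  # False: the rule applies
print(check_access(with_blank, "Googlebot", "/private/a.html"))     # True: the blank line ended the block
```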

Example robots.txt file

Let's look at a simple example of a robots.txt file to better understand the features of its syntax:

User-agent: Yandex
Allow: /folder1/
Disallow: /file1.html
Host: www.site.ru

User-agent: *
Disallow: /document.php
Disallow: /folderxxx/
Disallow: /folderyyy/folderzzz
Disallow: /feed/

Sitemap: http://www.site.ru/sitemap.xml

Now let's look at the described example.

The file consists of three blocks: the first for Yandex, the second for all search engines, and the third containing the sitemap address (it applies to all search engines automatically, so no “User-Agent” is needed). We allowed Yandex to index the folder “folder1” and all its contents but prohibited it from indexing the document “file1.html” in the root directory on the hosting. We also indicated the main domain of the site to Yandex. In the second block, for all search engines, we prohibited the document “document.php” and the folders “folderxxx”, “folderyyy/folderzzz” and “feed”.

Note that in the second block we did not prohibit the entire “folderyyy” folder, only the folder “folderzzz” inside it. That is, we specified the full path to “folderzzz”. This should always be done when prohibiting a document that is not in the root directory of the site but somewhere inside other folders.
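You can check the behaviour of this example with Python's urllib.robotparser (3.8 or later for site_maps()); it simply skips the Yandex-only Host line. The check also shows a consequence worth remembering: because Yandex reads only its own block, the rules of the User-agent: * block, such as the ban on document.php, do not apply to it. The robot names YandexBot and Googlebot are used for illustration.

```python
from urllib.robotparser import RobotFileParser

EXAMPLE = """\
User-agent: Yandex
Allow: /folder1/
Disallow: /file1.html
Host: www.site.ru

User-agent: *
Disallow: /document.php
Disallow: /folderxxx/
Disallow: /folderyyy/folderzzz
Disallow: /feed/

Sitemap: http://www.site.ru/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(EXAMPLE.splitlines())  # the Host line is not understood and is skipped

print(parser.can_fetch("YandexBot", "/file1.html"))                     # False
print(parser.can_fetch("YandexBot", "/document.php"))                   # True: Yandex reads only its own block
print(parser.can_fetch("Googlebot", "/document.php"))                   # False
print(parser.can_fetch("Googlebot", "/folderyyy/page.html"))            # True
print(parser.can_fetch("Googlebot", "/folderyyy/folderzzz/page.html"))  # False
print(parser.site_maps())  # ['http://www.site.ru/sitemap.xml']
```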

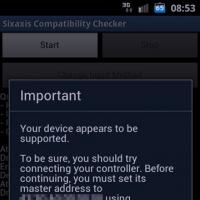

It will take less than two minutes to create:

The created robots file can be checked for functionality in the Yandex webmaster panel. If errors are suddenly found in the file, Yandex will show it.

Be sure to create a robots.txt file for your site if you don’t already have one. This will help your site develop in search engines. You can also read our other article about the method of meta tags and .htaccess.

The purpose of this guide is to help webmasters and administrators use robots.txt.

Introduction

The robot exemption standard is very simple at its core. In short, it works like this:

When a robot that follows the standard visits a site, it first requests a file called “/robots.txt”. If the file is found, the robot searches it for instructions prohibiting indexing of certain parts of the site.

Where to place the robots.txt file

The robot simply requests the URL “/robots.txt” on your site; the site in this case is a specific host on a specific port.

| Site URL | Robots.txt file URL |
|---|---|
| http://www.w3.org/ | http://www.w3.org/robots.txt |
| http://www.w3.org:80/ | http://www.w3.org:80/robots.txt |
| http://www.w3.org:1234/ | http://www.w3.org:1234/robots.txt |
| http://w3.org/ | http://w3.org/robots.txt |

There can be only one “/robots.txt” file on a site. For example, do not place robots.txt files in user subdirectories: robots will not look for them there anyway. If you want to be able to create robots.txt files in subdirectories, you need a way to collect them programmatically into a single robots.txt file at the root of the site. You can use .
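One way to handle that collection step is sketched below in Python. The input format and the function name `merge_fragments` are assumptions made for illustration. Each hypothetical per-directory fragment lists paths relative to its own directory, and the script rewrites them as absolute paths under a single `User-agent: *` group. A real setup would also need a policy for fragments that target specific robots.

```python
from __future__ import annotations


def merge_fragments(fragments: dict[str, list[str]]) -> str:
    """Build one root robots.txt from hypothetical per-directory fragments.

    `fragments` maps a directory such as "/~joe/" to paths relative to it,
    e.g. ["private/", "tmp/"]. Robots only read /robots.txt at the root,
    so every path is rewritten relative to the site root.
    """
    lines = ["User-agent: *"]
    for directory in sorted(fragments):
        inner = directory.strip("/")
        base = f"/{inner}/" if inner else "/"
        for path in fragments[directory]:
            lines.append("Disallow: " + base + path.lstrip("/"))
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(merge_fragments({"/~joe/": ["private/", "tmp/"], "/~tim/": ["drafts/"]}))
```

The output of such a script is what gets uploaded as the one root file. The fragments themselves are never read by robots.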

Remember that URLs are case sensitive and the file name “/robots.txt” must be written entirely in lowercase.

| Wrong location of robots.txt | Reason |
|---|---|
| http://www.w3.org/admin/robots.txt | The file is not located at the root of the site |
| http://www.w3.org/~timbl/robots.txt | The file is not located at the root of the site |
| ftp://ftp.w3.com/robots.txt | Robots do not index FTP |
| http://www.w3.org/Robots.txt | The file name is not in lowercase |

As you can see, the robots.txt file should be placed exclusively at the root of the site.
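Both tables follow mechanical rules, which the short Python sketch below expresses with the standard `urllib.parse` module. The function names are ours, chosen for illustration. A robot keeps the scheme, host and port and always asks for `/robots.txt`. A robots.txt URL is only useful if it is served over HTTP(S) at exactly that lowercase root path.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(site_url: str) -> str:
    """URL a robot requests: same scheme, host and port, path /robots.txt."""
    parts = urlsplit(site_url)
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


def is_valid_robots_location(url: str) -> bool:
    """True if robots will read this file: HTTP(S), site root, lowercase name."""
    parts = urlsplit(url)  # urlsplit lowercases the scheme
    return parts.scheme in ("http", "https") and parts.path == "/robots.txt"


if __name__ == "__main__":
    print(robots_txt_url("http://www.w3.org:1234/some/page.html"))
    print(is_valid_robots_location("http://www.w3.org/Robots.txt"))
```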

What to write in the robots.txt file

The robots.txt file usually contains something like:

    User-agent: *
    Disallow: /cgi-bin/
    Disallow: /tmp/
    Disallow: /~joe/

In this example, indexing of three directories is prohibited.

Note that each directory is listed on a separate line: you cannot write "Disallow: /cgi-bin/ /tmp/". Nor can you split one Disallow or User-agent instruction across several lines, because line breaks are what separate instructions from each other.
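To see why each path needs its own line, here is a quick check with Python's standard `urllib.robotparser`, used as a stand-in for a robot that follows the standard. The host `example.com` and the file paths are placeholders. This parser reads "/cgi-bin/ /tmp/" as one odd path that contains a space, so neither directory ends up blocked.

```python
from __future__ import annotations

from urllib.robotparser import RobotFileParser


def blocked_paths(robots_txt: str, paths: list[str], agent: str = "*") -> list[str]:
    """Return the subset of `paths` that `agent` may not fetch."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [p for p in paths if not parser.can_fetch(agent, "http://example.com" + p)]


ONE_LINE = "User-agent: *\nDisallow: /cgi-bin/ /tmp/\n"            # wrong: two paths on one line
SEPARATE = "User-agent: *\nDisallow: /cgi-bin/\nDisallow: /tmp/\n"  # right: one path per line
PATHS = ["/cgi-bin/search", "/tmp/cache.html"]

if __name__ == "__main__":
    print("one line:", blocked_paths(ONE_LINE, PATHS))
    print("separate:", blocked_paths(SEPARATE, PATHS))
```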

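The original standard does not support regular expressions or wildcards either. The asterisk (*) in the User-agent instruction simply means “any robot”. Instructions like “Disallow: *.gif” or “User-agent: Ya*” are not part of the standard. (Google and Yandex, however, extend it and accept * and $ in Allow and Disallow paths.)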

The specific instructions in robots.txt depend on your site and what you want to prevent from being indexed. Here are some examples:

Block the entire site from being indexed by all robots

    User-agent: *
    Disallow: /

Allow all robots to index the entire site

    User-agent: *
    Disallow:

Or you can simply create an empty file “/robots.txt”.

Block only a few directories from indexing

    User-agent: *
    Disallow: /cgi-bin/
    Disallow: /tmp/
    Disallow: /private/

Prevent site indexing for only one robot

    User-agent: BadBot
    Disallow: /

Allow one robot to index the site and deny all others

    User-agent: Yandex
    Disallow:

    User-agent: *
    Disallow: /
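The examples above can be checked with Python's standard `urllib.robotparser`, which follows the original standard closely enough for these cases. The host `example.com` and the page paths are placeholders. Each group is written without blank lines inside it, because in this parser a blank line right after a User-agent line discards that group.

```python
from urllib.robotparser import RobotFileParser


def can_fetch(robots_txt: str, agent: str, path: str) -> bool:
    """Parse `robots_txt` and report whether `agent` may fetch `path`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, "http://example.com" + path)


BLOCK_ALL = "User-agent: *\nDisallow: /\n"
ALLOW_ALL = "User-agent: *\nDisallow:\n"
EMPTY_FILE = ""
BLOCK_BADBOT = "User-agent: BadBot\nDisallow: /\n"
ONLY_YANDEX = "User-agent: Yandex\nDisallow:\n\nUser-agent: *\nDisallow: /\n"

if __name__ == "__main__":
    print(can_fetch(ONLY_YANDEX, "Yandex", "/page.html"))     # Yandex is let in
    print(can_fetch(ONLY_YANDEX, "Googlebot", "/page.html"))  # everyone else is not
```

An empty file and an empty `Disallow:` give the same result: with no matching rule, access is allowed.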

Deny all files except one from indexing

This is awkward, because the original standard has no “Allow” instruction. Instead, you can move all files except the one you want indexed into a subdirectory and block that subdirectory:

    User-agent: *
    Disallow: /docs/

Alternatively, list every file that should be blocked from indexing:

    User-agent: *
    Disallow: /private.html
    Disallow: /foo.html
    Disallow: /bar.html
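Many robots (including Google, Yandex and Python's `urllib.robotparser`) also understand an Allow instruction, which makes “everything except one file” a two-line rule. The sketch compares that with the subdirectory approach. The file names are hypothetical. `urllib.robotparser` applies the first matching rule, so Allow must come before Disallow here. Google and Yandex instead pick the most specific (longest) matching rule regardless of order.

```python
from urllib.robotparser import RobotFileParser


def can_fetch(robots_txt: str, path: str, agent: str = "Googlebot") -> bool:
    """Parse `robots_txt` and report whether `agent` may fetch `path`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, "http://example.com" + path)


# Original-standard approach: everything to hide lives under /docs/.
DOCS_DIR = "User-agent: *\nDisallow: /docs/\n"

# Allow-based approach: open one file, close everything else.
# This parser uses the first matching rule, so Allow is listed first.
ALLOW_ONE = "User-agent: *\nAllow: /index.html\nDisallow: /\n"

if __name__ == "__main__":
    for path in ["/index.html", "/docs/private.html", "/private.html"]:
        print(path, can_fetch(DOCS_DIR, path), can_fetch(ALLOW_ONE, path))
```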

First, I’ll tell you what robots.txt is.

Robots.txt is a file in the root folder of a site that contains special instructions for search robots. These instructions tell a robot visiting the site to ignore certain pages or sections. In other words, they close those pages to indexing.

Why do we need robots.txt?

The robots.txt file is considered a key requirement for the SEO optimization of any website. Without it, robots can put extra load on the site and index it more slowly. Some pages may not get indexed at all, so users will not be able to find them through Yandex and Google.

How does robots.txt affect search engines?

Search engines (especially Google) will index the site anyway, but, as I said, without a robots.txt file not all pages will be indexed. If the file exists, robots follow the rules it specifies. There are, however, several kinds of search robots, and some take a given rule into account while others ignore it. In particular, Googlebot ignores the Host and Crawl-delay directives. The YandexNews robot has recently stopped taking Crawl-delay into account. The YandexDirect and YandexVideoParser robots ignore the general directives in robots.txt but follow those written specifically for them.

The heaviest load on a site comes from robots that download its content. We can tell a robot which pages to index and which to ignore, and at what intervals to request pages. Doing so makes indexing and downloading the site's content much easier for the robot. The request interval matters mostly for large sites with more than 100,000 pages in the search index.

Files that search engines do not need include those belonging to the CMS, for example /wp-admin/ in WordPress. The same goes for AJAX and JSON scripts responsible for pop-up forms, banners, captcha output and so on.

For most robots, I also recommend blocking all JavaScript and CSS files from indexing. For Googlebot and Yandex, however, it is better to leave these files open, since these search engines use them to assess the site's usability and to rank it.

What is a robots.txt directive?

Directives are the rules for search robots. The first standard for writing robots.txt, and with it the directives, appeared in 1994, and the extended standard followed in 1996. However, as you already know, not all robots support every directive. So below I describe which directives the main robots follow when indexing website pages.

What does User-agent mean?

This is the most important directive: it determines which search robots the rules that follow apply to.

For all robots:

User-agent: *

For a specific bot:

User-agent: Googlebot

Case does not matter in the User-agent value: you can write either Googlebot or googlebot.
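Python's `urllib.robotparser` can illustrate two points. First, the group name is compared case-insensitively. Second, a robot that finds a group naming it ignores the general “*” group. The file below is a made-up example. This parser's matching (a case-insensitive substring test on the robot's name) is a simplification of what search engines do.

```python
from urllib.robotparser import RobotFileParser

# Made-up file: the group name is deliberately written in lowercase.
ROBOTS_TXT = """\
User-agent: googlebot
Disallow: /drafts/

User-agent: *
Disallow: /tmp/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def allowed(agent: str, path: str) -> bool:
    """True if `agent` may fetch `path` on the placeholder host example.com."""
    return parser.can_fetch(agent, "http://example.com" + path)


if __name__ == "__main__":
    print(allowed("Googlebot", "/drafts/post.html"))  # blocked by the googlebot group
    print(allowed("Googlebot", "/tmp/cache.html"))    # the "*" group does not apply
```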

Google search robots

Yandex search robots

| Robot | Purpose |
|---|---|
| | Yandex's main indexing robot |
| | Used in the Yandex.Images service |
| | Used in the Yandex.Video service |
| | Multimedia data |
| | Blog search |
| | Search robot that requests a page when it is added via the “Add URL” form |
| | Robot that indexes website icons (favicons) |
| | Yandex.Direct |
| | Yandex.Metrica |
| | Used in the Yandex.Catalog service |
| | Used in the Yandex.News service |
| YandexImageResizer | Mobile services search robot |

Bing, Yahoo, Mail.ru and Rambler search robots

Disallow and Allow directives

Disallow blocks sections and pages of your site from indexing; Allow, on the contrary, opens them.

There are some peculiarities.

First, there are three special characters: *, $ and #. What are they for?

“*” matches any sequence of characters, including an empty one. It is implied at the end of every rule by default, so there is no point in adding it there.

“$” anchors the rule to the end of the URL: the character before it must be the last one.

“#” starts a comment: the robot ignores everything after this character.
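To make these characters concrete, here is a small Python sketch of the matching that Google and Yandex document for Allow and Disallow. In this scheme, `*` matches any run of characters, `$` pins the rule to the end of the URL, and a rule is otherwise a prefix. The longest matching rule wins, and Allow wins a tie. The rules, paths and function names are illustrative. User-agent grouping and percent-encoding are ignored, and this is not either search engine's actual code.

```python
from __future__ import annotations

import re


def parse_rules(text: str) -> list[tuple[str, str]]:
    """Collect (directive, pattern) pairs from Allow/Disallow lines.

    Everything after '#' is a comment and is dropped. User-agent groups are
    not handled: all rules are treated as one group.
    """
    rules = []
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()
        if ":" not in line:
            continue
        key, value = (part.strip() for part in line.split(":", 1))
        if key.lower() in ("allow", "disallow"):
            rules.append((key.lower(), value))
    return rules


def rule_to_regex(pattern: str) -> re.Pattern:
    """'*' becomes '.*'; a trailing '$' anchors the end; otherwise prefix match."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    body = ".*".join(re.escape(piece) for piece in pattern.split("*"))
    return re.compile(body + ("$" if anchored else ""))


def is_allowed(rules: list[tuple[str, str]], path: str) -> bool:
    """Longest matching pattern wins; on a tie Allow wins; no match means allowed."""
    best_len, allowed = -1, True
    for directive, pattern in rules:
        if not pattern:  # an empty Allow/Disallow value matches nothing
            continue
        if rule_to_regex(pattern).match(path):  # match() anchors at the start
            is_allow = directive == "allow"
            if len(pattern) > best_len or (len(pattern) == best_len and is_allow):
                best_len, allowed = len(pattern), is_allow
    return allowed


RULES = parse_rules("""
Disallow: *?s=              # internal search results
Disallow: /category/
Allow: /category/public/
Disallow: /*.pdf$
""")

if __name__ == "__main__":
    for path in ["/page?s=robots", "/category/news/", "/category/public/a.html",
                 "/blog/category/", "/files/report.pdf", "/files/report.pdf?dl=1"]:
        print(path, is_allowed(RULES, path))
```

Precedence here depends on pattern length rather than position, so reversing the rule list gives the same answers.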

Examples of using Disallow:

    Disallow: *?s=
    Disallow: /category/

Accordingly, the search robot will close pages like:

But pages like this will be open for indexing:

Now let's see how nested rules are applied. For Google and Yandex, the order in which the directives are written does not matter: what counts is which directories the rules name, and the rule with the longest matching path wins. That is, to block a page or document from indexing, it is enough to write a directive for it. Let's look at an example.

Here is our robots.txt file:

Disallow: /template/

The Sitemap directive gives the address of the site map. It can be placed anywhere in the file, and several sitemap files can be listed.
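Python's `urllib.robotparser` (Python 3.8 or later, for `site_maps()`) can show both properties. A Sitemap line is read wherever it appears, and several of them are collected in order. The URLs are placeholders.

```python
from urllib.robotparser import RobotFileParser

# Placeholder file: one Sitemap line before the group, one after it.
ROBOTS_TXT = """\
Sitemap: https://example.com/sitemap-pages.xml

User-agent: *
Disallow: /admin/

Sitemap: https://example.com/sitemap-posts.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())
sitemaps = parser.site_maps()  # list of URLs, or None if there are none

if __name__ == "__main__":
    print(sitemaps)
```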

Host directive in robots.txt

This directive indicates the main mirror of the site (usually the version with or without www). For a site on http, the host is written without the http:// protocol. For a site on https, the https:// protocol must be included. The directive is taken into account only by Yandex and Mail.ru robots; other robots, including Googlebot, ignore it. Host should be specified only once in robots.txt. Yandex has since stopped supporting Host and now recommends a 301 redirect to the main mirror instead.

Example with http://

Host: website.ru

Example with https://

Host: https://website.ru

Crawl-delay directive

Sets the minimum interval between a search robot's requests to the site's pages. The value is given in seconds; fractional values such as 0.5 are allowed.

Example:

It is used mostly on large online stores, information sites and portals with 5,000 or more visitors per day. It makes the search robot wait the given interval between requests. Without this directive, the robot can put a serious load on the server.

The optimal Crawl-delay value differs from site to site. For Mail.ru, Bing and Yahoo, the value can be set to a minimum such as 0.25 or 0.3. These robots crawl the site rarely (once a month, once every two months and so on). For Yandex, it is better to set a higher value.

If the load on your site is minimal, then there is no point in specifying this directive.
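A robot that waits d seconds between requests can make at most 86,400 / d requests a day. So `Crawl-delay: 0.5` still allows up to 172,800 requests, while a value of 2 caps it at 43,200. The sketch below does that arithmetic and reads the value back with Python's `urllib.robotparser`. This particular parser only accepts whole numbers, so it ignores a fractional value such as 0.5 even though such values are valid in the directive. The function names are ours, for illustration.

```python
from urllib.robotparser import RobotFileParser

SECONDS_PER_DAY = 24 * 60 * 60  # 86,400


def max_requests_per_day(crawl_delay: float) -> float:
    """Upper bound on daily requests when a robot waits `crawl_delay` seconds."""
    return SECONDS_PER_DAY / crawl_delay


def declared_delay(robots_txt: str, agent: str):
    """Crawl-delay for `agent` as read by urllib.robotparser, or None.

    This parser only accepts whole numbers, so "0.5" is reported as None.
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.crawl_delay(agent)


if __name__ == "__main__":
    for delay in (0.5, 2, 10):
        print(delay, max_requests_per_day(delay))
```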

Clean-param directive

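This rule is interesting because it tells the crawler which URL parameters (such as UTM tags) do not change the page content. Pages that differ only in those parameters then need not be indexed separately. The directive takes two arguments: the parameter names (joined with &) and, optionally, the path prefix of the pages they apply to. It is supported by Yandex.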

Example:

    User-agent: *
    Disallow: /admin/
    Disallow: /plugins/
    Disallow: /search/
    Disallow: /cart/
    Disallow: *sort=
    Disallow: *view=

    User-agent: GoogleBot
    Disallow: /admin/
    Disallow: /plugins/
    Disallow: /search/
    Disallow: /cart/
    Disallow: *sort=
    Disallow: *view=
    Allow: /plugins/*.css
    Allow: /plugins/*.js
    Allow: /plugins/*.png
    Allow: /plugins/*.jpg
    Allow: /plugins/*.gif

    User-agent: Yandex
    Disallow: /admin/
    Disallow: /plugins/
    Disallow: /search/
    Disallow: /cart/
    Disallow: *sort=
    Disallow: *view=
    Allow: /plugins/*.css
    Allow: /plugins/*.js
    Allow: /plugins/*.png
    Allow: /plugins/*.jpg
    Allow: /plugins/*.gif
    Clean-Param: utm_source&utm_medium&utm_campaign

In the example, we wrote down the rules for 3 different bots.
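The effect of the Clean-Param line at the end of this example can be sketched in Python. The directive value is split into parameter names and an optional path prefix. Those parameters are then stripped from a URL, so addresses that differ only in UTM tags collapse into one. This only illustrates the idea: the function names and URLs are hypothetical, and it is not how Yandex applies the directive internally.

```python
from __future__ import annotations

from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def parse_clean_param(value: str) -> tuple[set[str], str | None]:
    """Split a Clean-param value "p1&p2 [path]" into (names, path prefix)."""
    fields = value.split()
    names = set(fields[0].split("&"))
    prefix = fields[1] if len(fields) > 1 else None
    return names, prefix


def clean_url(url: str, names: set[str], prefix: str | None = None) -> str:
    """Remove the listed query parameters from `url`.

    URLs whose path does not start with `prefix` (when given) are returned
    unchanged. Remaining parameters keep their order but are re-encoded.
    """
    parts = urlsplit(url)
    if prefix is not None and not parts.path.startswith(prefix):
        return url
    kept = [(k, v) for k, v in parse_qsl(parts.query, keep_blank_values=True)
            if k not in names]
    return urlunsplit((parts.scheme, parts.netloc, parts.path,
                       urlencode(kept), parts.fragment))


if __name__ == "__main__":
    names, prefix = parse_clean_param("utm_source&utm_medium&utm_campaign")
    print(clean_url("https://example.com/catalog/?id=7&utm_source=mail", names, prefix))
```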

Where to add robots.txt?

Put it in the root folder of the site, so that it opens at the link:

How to check robots.txt?

Yandex Webmaster

On the Tools tab, select “Robots.txt analysis”, then click “Check”.

Google Search Console

On the Crawl tab, choose the robots.txt file inspection tool, then click “Check”.

Conclusion:

The robots.txt file must be present on every website being promoted, and only a correctly configured file will give you the indexing you need.

Finally, if you have any questions, ask them in the comments under the article. I’m also curious: how do you write robots.txt?