Remove a URL from search. Removing pages from the search engine index

We have released a new book “Content Marketing in social networks: How to get into your subscribers’ heads and make them fall in love with your brand.”

To reach your goals with a website, you have to keep track of many subtleties. During optimization, through simple inattention, information that is not meant for the eyes of Internet users sometimes ends up in the index. Naturally, such content has to be removed. In this article we look at the common cases where this need arises and, of course, learn how to delete a page from Yandex search.

Reasons for removing web pages from search

We will not analyze the problems of giants, such as the story of a well-known mobile operator whose name I will not advertise; I will only say that it begins with “mega” and ends with “fon”. Its pages with users' SMS messages were indexed and appeared in search. Nor will we dwell on the problems of online stores, where users' personal data and order details periodically turn up in open access. And so on.

We will look at the pressing problems that ordinary entrepreneurs want to solve:

- Duplicates. It is no secret that duplicate content interferes with website promotion. The reasons it appears vary.

- The information has lost its relevance. For example, there was a one-time event, and it has passed. The page needs to be deleted.

- "Secrets." Data that is not for everyone. Let us recall the situation with the telecom operator. Such pages must be hidden from onlookers.

- Changed URLs. Whether you are moving the site or setting up human-readable URLs, you will have to get rid of the old pages and give the search engine the new ones.

- Moving to a new domain name. You have decided to remove the old site from the Yandex search engine completely so that the uniqueness of the content does not suffer.

In fact, there may be many more reasons to delete saved pages from search engines. The list only illustrates typical problems.

How to delete a page from the Yandex search engine

There are two ways to go about it: a long one (for the lazy) and a fast one (which takes a little effort).

- The long way

If you are in no hurry and have more important things to do, you can simply delete the page through the content management system (CMS, also known as the admin panel). When the search robot next requests the URL, the server will respond with a 404 code, meaning the page does not exist, so the robot will drop it from the search over time without any intervention from you.

- The fast way

This continues the first way. After deleting the page in the CMS, submit its address to the Yandex Remove URL service. The search engine will respond promptly, and the unwanted information will soon disappear from the index.

But what if you need to remove a page from the search engine, while leaving it on the site itself?

How to delete a page in the Yandex search engine without deleting it from the site

First, block the page from indexing in the robots.txt file, which you have surely encountered already. Add the following to the file:

User-agent: Yandex

Disallow: /i-hate-my-page

This blocks indexing of the page whose URL is www.domain.ru/i-hate-my-page
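Before uploading the file, the rule can be sanity-checked locally. The sketch below uses Python's standard urllib.robotparser module; the domain and path are the example values from this section, and the helper name is_blocked is invented for illustration. This parser does simple prefix matching, so it only approximates how the Yandex robot reads the file.

```python
from urllib.robotparser import RobotFileParser

# The same two lines that go into robots.txt (example values from the article).
ROBOTS_TXT = """\
User-agent: Yandex
Disallow: /i-hate-my-page
"""


def is_blocked(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if robots_txt forbids user_agent from fetching url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    # The blocked page, then an unrelated page on the same site.
    print(is_blocked(ROBOTS_TXT, "Yandex", "http://www.domain.ru/i-hate-my-page"))
    print(is_blocked(ROBOTS_TXT, "Yandex", "http://www.domain.ru/other-page"))
```

Because the rule is written for User-agent: Yandex only, other robots are not affected by it.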

Second, because robots.txt is advisory rather than a strict set of rules for the robot, Disallow alone may not be enough. To be sure, add the robots meta tag with the noindex value to the page's HTML code.

Note that the placement matters: the robots meta tag must sit between the opening and closing head tags.

The final step is adding the address to the already familiar Yandex Remove URL service.
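To confirm that a page really carries the tag in the right place, a small check can help. The sketch below is an illustration only: it uses Python's standard html.parser, the class and function names are made up, and the sample markup is an assumed example of a robots meta tag with the noindex value placed inside head.

```python
from html.parser import HTMLParser


class RobotsMetaFinder(HTMLParser):
    """Collect directives from <meta name="robots"> tags found inside <head>."""

    def __init__(self):
        super().__init__()
        self.in_head = False
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag == "head":
            self.in_head = True
        elif tag == "meta" and self.in_head:
            attr = dict(attrs)
            if (attr.get("name") or "").lower() == "robots":
                content = attr.get("content") or ""
                self.directives += [d.strip().lower() for d in content.split(",")]

    def handle_endtag(self, tag):
        if tag == "head":
            self.in_head = False


def has_noindex(html: str) -> bool:
    """Return True if the page's head contains a robots meta tag with noindex."""
    finder = RobotsMetaFinder()
    finder.feed(html)
    return "noindex" in finder.directives


# Assumed sample page, not taken from a real site.
SAMPLE = (
    '<html><head><meta name="robots" content="noindex, nofollow"></head>'
    "<body>Hidden page</body></html>"
)
```

A tag placed in the body is deliberately ignored here, which mirrors the advice that it belongs between the head tags.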

How to remove a site from the Yandex search engine

In order to completely remove a site from the search database, you need to go through the same steps as to delete a page, but with some nuances.

- Block the entire site from indexing by adding these lines to robots.txt:

User-agent: Yandex

Disallow: /

- Delete the pages using the Remove URL service.

- Get rid of all links leading to your site.

- Wait for re-indexing.

If you no longer need the site at all, delete all its files from the hosting and forget about it. As a last resort, contact the search engine's support service.

Bottom line

To sum up, deleting saved pages from search is not very difficult, and sometimes it is simply necessary. I hope that site owners who do not want to dig too deep into the details will find here the answer to the question of how to remove unnecessary web pages from Yandex search.

Hope this was helpful!

There are situations when the task is not to get pages indexed quickly but to remove them from search results.

For example, you bought a domain name and are building a completely new website on it, unrelated to the previous one. Or pages that should not be there got into the index. Or you simply decided to close a section of the site that is no longer relevant.

Today we are talking about how to remove pages from the Google and Yandex search indexes.

We will take a detailed look at the main ways you can quickly remove pages from search results.

Let's get straight to the point!

Ways to remove pages from the search engine index:

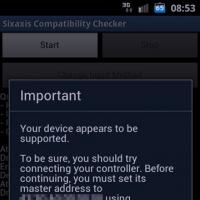

1. Removal in Webmaster Tools

The simplest option is to delete pages from the index using the Google and Yandex webmaster tools.

Removing pages from Google search

Go to the URL removal tool in the webmaster panel, then submit a request to delete the page.

The question immediately arises, how long to wait?

Deleting pages is very fast: in this example, the result was checked on the same site 8 hours after the request was added.

Even a large number of pages can be removed from the index extremely quickly. The same site previously had more than 1200 pages in the index, then a couple of dozen, and now practically only the main page is left.

Removing pages from Yandex search

Add the desired address of the page that needs to be deleted.

As a result, we can get the following answer:

That is, you will still have to carry out the steps described below, which will speed up the removal of pages from the Yandex index.

2. Robots.txt

The Disallow directive excludes pages or sections from indexing.

You can block individual pages as well as entire sections of the site, that is, anything you do not want search engines to index. Both Google and Yandex take robots.txt into account.

For example, closing a section:

Closing the page:

Disallow: /page1.html

Closing search pages:

Disallow: /site/?s*
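Patterns like these can be tried out against sample addresses before they go live. The sketch below is a rough approximation, not either search engine's actual matcher: it assumes the commonly documented wildcard extensions (* for any sequence of characters, a trailing $ for end of URL), and the function name rule_matches is invented for this example.

```python
import re


def rule_matches(pattern: str, path: str) -> bool:
    """Check a URL path against a Disallow pattern with * and $ wildcards."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Escape regex metacharacters, then turn the robots.txt '*' back into '.*'.
    regex = re.escape(pattern).replace(r"\*", ".*")
    if anchored:
        regex += "$"
    # Rules match from the start of the path, like a prefix.
    return re.match(regex, path) is not None


if __name__ == "__main__":
    for path in ("/page1.html", "/site/?s=shoes", "/site/about"):
        blocked = rule_matches("/page1.html", path) or rule_matches("/site/?s*", path)
        print(path, "blocked" if blocked else "allowed")
```

Note that the question mark in /site/?s* is a literal character in robots.txt, which is why the pattern is escaped before the wildcard is expanded.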

There will soon be a very voluminous post about robots.txt with many technical points that are at least worth knowing and applying to solve website problems.

3. 404 error

It is also important to set up a 404 error page so that the server returns a 404 status. Then, the next time search bots crawl the site, they will drop these pages from the index.

The server should return a 404 response for missing pages by default. If it does not, many duplicate pages can appear on the site, which hurts traffic growth.

Note that removing pages from the index this way may take time, since you have to wait for the search engine bots.

These are the main points that can affect the removal of pages from the search engine index.

In practice, other methods are used far less often, such as:

- robots meta tag

- X-Robots-Tag

But to be sure the required pages are removed from the index, it is better to use all 3 methods described above. Removal will then be much faster.

You can also simply delete pages and leave only a stub site, but it is not a fact that the pages will disappear from the index very quickly, so it is better to help them with this.


During the creation and operation of a website, pages are often deleted or given new addresses. The problem is that if the search engine managed to index them before they were deleted, it will still show users the information the page contained. Or service pages become public, although they may contain information not intended for prying eyes. So how can you avoid such trouble, and how can you delete a page from Google and other search engines, making the visible invisible?

Incidents with outdated pages are found all over the Internet. A search can reveal clients' personal information and all their orders in detail. After all, we are often asked to fill out forms with very personal information that should be hidden from everyone. This article explains how to avoid such mistakes.

Reasons why search engines take us to non-existent pages

The most common reason is that the page has been deleted and no longer exists, but the webmaster forgot to remove it from the Yandex index (or that of other search engines), or the site is maintained by a beginner who simply does not know how to delete a page from a search engine. Or the page became unavailable after manual editing of the site. Novice administrators who neglect their resource are often guilty of this.

Site structure is another cause. Content management systems (CMS) are often not configured optimally out of the box. For example, right after the well-known blogging engine WordPress is installed on hosting, the site does not meet optimization requirements, because its URLs consist of numeric and alphabetic identifiers. The webmaster has to switch the page structure to human-readable URLs, which can leave many non-working addresses that the search engine will still show in response to queries.

Therefore, do not forget to track changed addresses and use a 301 redirect, which sends requests from the old address to the new one. Ideally, all site settings should be completed before the site opens, and a local server will help here.
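As a rough illustration of the 301 idea, here is a minimal sketch using only Python's standard http.server. The old and new addresses in the table are hypothetical, and a real site would normally configure redirects in its web server or CMS rather than in a script like this.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical mapping from old addresses to their new, human-readable ones.
REDIRECTS = {
    "/?p=123": "/blog/how-to-remove-a-page/",
    "/old-section/": "/new-section/",
}


def resolve(path: str):
    """Return (status, location): 301 with a target for moved pages, else 404."""
    if path in REDIRECTS:
        return 301, REDIRECTS[path]
    return 404, None


class RedirectHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        status, location = resolve(self.path)
        if status == 301:
            # Permanent redirect: tells robots the page has moved for good.
            self.send_response(301)
            self.send_header("Location", location)
            self.end_headers()
        else:
            self.send_error(404)


def serve(port: int = 8000):
    """Run the demo server locally (blocks until interrupted)."""
    HTTPServer(("127.0.0.1", port), RedirectHandler).serve_forever()
```

Calling serve() starts a local test server, which fits the advice to finish such settings on a local machine before the site opens.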

The server may also be configured incorrectly from the start. When a non-existent page is requested, it should return a 404 error code or a 3xx redirect.

Reasons why seemingly deleted pages appear in the index

Sometimes it seems that all unnecessary pages are closed off from prying eyes, yet search engines find them without any restriction. This can happen when:

- the robots.txt file is written incorrectly;

- the administrator removed the extra pages from the index too late, after search engines had already indexed them;

- third-party sites or other pages of the same site link to the pages at the addresses they had before the webmaster changed them.

So, a great many things can go wrong. Let's consider options for solving the problem.

How to remove a page from the index of Yandex and other search engines

- robots.txt

A favorite way for many to remove pages from the index is the robots.txt file. A great deal has been written about configuring this file correctly. We won't repeat it here, but the file lets you conveniently hide an entire section of the site or a single file from search.

This method also requires waiting until the search engine robot reads the file and drops the page or section from the search. As mentioned earlier, external links to closed pages can still make them discoverable, so be careful.

- Meta robots tag

This tag is set in the HTML code of the page itself. The method is convenient in its simplicity. I recommend it to novice webmasters who build their website page by page. The tag can easily be added to every page whose contents need to be hidden from prying eyes. At the same time, the robots.txt file is not cluttered with extra instructions and stays simple and understandable. The method has one drawback: it is difficult to apply to a dynamic site. Because such sites assemble pages from shared templates, the tag can end up closing all pages of the resource instead of just the selected ones, so you need to be careful here.

- X-Robots-Tag

At the time of writing, this method suited only foreign search engines such as Google; Yandex did not yet support the header, though that may change. It is very similar to the robots meta tag. The main difference is that the directive is written in the HTTP response headers rather than in the page code, so it is not visible in the page source. The method can be very convenient, but do not forget that the pages are only partially closed: the Yandex search engine will continue to find them without problems. Black-hat SEO specialists often use this technique to hide pages with links from search engines.
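To make the difference from the meta tag concrete: the directive travels in the server's response headers, for example as X-Robots-Tag: noindex. The sketch below checks a set of response headers for it. It is a simplified illustration; the function name is made up, the headers are assumed to arrive as a plain dictionary, and forms that prefix the directive with a robot name are not handled.

```python
def header_blocks_indexing(headers: dict) -> bool:
    """Return True if an X-Robots-Tag response header asks not to index the page."""
    for name, value in headers.items():
        # Header names are case-insensitive, so compare in lower case.
        if name.lower() == "x-robots-tag":
            directives = [d.strip().lower() for d in value.split(",")]
            if "noindex" in directives:
                return True
    return False


if __name__ == "__main__":
    # Assumed example headers, not captured from a real server.
    print(header_blocks_indexing({"Content-Type": "text/html", "X-Robots-Tag": "noindex, nofollow"}))
    print(header_blocks_indexing({"Content-Type": "text/html"}))
```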

How to delete a page in Google and Yandex search engines

- 404 error. The simplest way to remove a page from the search engine index is to actually delete it and make sure the server returns a 404 error at that address, indicating that the requested page does not exist. Most CMSs, WordPress included, correctly tell the robot when a page is deleted that the document is missing and should be removed from the index.

However, the 404 code takes effect only after the robot visits the resource. How soon that happens depends on when the page was last indexed, and it can sometimes take quite a long time.

This method is not suitable if the page is still needed for the site to function, for example if it holds service information. In that case I recommend the methods below.

- Manual removal. A reliable, fast and simple method that is accessible to everyone is manual removal. Links for webmasters where you can remove pages from Yandex and Google:

- Yandex;

- Google.

There is one condition: for this method to work, the pages must first be closed to the robot by any of the methods listed above. If the resource is large, the method is slow to carry out, because each page has to be deleted individually. But it is simple and understandable even for beginners. In practice, Google processes a request within several hours; Yandex is a little slower, and you will have to wait for an index update. Even so, compared with the other ways of deleting pages from a search engine, this one is the fastest.

A properly designed website structure makes it easier to operate, improves visibility for search engines and creates a positive impression of the site among its visitors. After all, what could be more important for a resource than reputation and trust in it.

How to remove indexed pages from Yandex and Google search?

Sometimes, for some reason, it is necessary to remove pages that were previously indexed by the search engine. There are several ways.

1. Deny further indexing of the page.

You can prevent further indexing of the page in your robots.txt file using the following line:

Disallow: /your_page

For example:

Disallow: /shops/mylikes.html

This line prevents search engines from indexing the page mylikes.html located in the shops directory (folder).

If you want to prohibit indexing of the entire site, and not just individual pages, add the following to robots.txt:

Disallow: /

This method will prevent search engines from crawling your site. Its disadvantage is that all pages already indexed when the lines were added to robots.txt will remain in the search engine cache and continue to appear in search results.

2. Removing site pages from the Yandex and Google cache.

2.1. Yandex

To completely remove pages of your site from the index, you must first either delete them (so that the page URL returns 404) or block them in robots.txt. Then follow the link http://webmaster.yandex.ru/delurl.xml and enter the path to the page in the form. Yandex will remove the page from the search results and, accordingly, from the cache.

For example, to remove the page mylikes.html from the search results, enter:

http://your.site/shops/mylikes.html

After this, the page mylikes.html will be added to the deletion queue and will leave the Yandex cache within a few days. In the same way, you can delete the entire site from the cache; to do this, enter in the form:

http://your.site/

2.2. Google

To remove pages from Google search:

1. Log in to the Google Webmaster Panel.

2. In the list of sites you have added, select the one whose pages you want to remove from the search.

3. On the left, under "Optimization", choose "Remove URLs".

4. Click "Create a new deletion request", then in the window that opens enter the path to the page. The path is case sensitive.

Be careful! Specify the path in relative form. That is, if you want to delete the page http://your.site/shops/mylikes.html, enter only /shops/mylikes.html in the form.

If you want to remove the entire site from search results, enter / in the form. This single character is a request to remove the entire site.

5. Expect your pages to disappear from searches soon.
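The relative form described in step 4 can be derived from a full address mechanically. A minimal sketch with Python's standard urllib.parse follows; the function name to_relative is invented, and the addresses are the example ones from this section.

```python
from urllib.parse import urlsplit


def to_relative(url: str) -> str:
    """Strip the scheme and host, keeping the path and any query string."""
    parts = urlsplit(url)
    path = parts.path or "/"  # a bare domain maps to the site root
    return f"{path}?{parts.query}" if parts.query else path


if __name__ == "__main__":
    print(to_relative("http://your.site/shops/mylikes.html"))
    print(to_relative("http://your.site/"))
```

The case of the path is left untouched, since the form treats the path as case sensitive.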

Sometimes it is necessary to remove a site page from search results, for example, if it contains confidential information or has been deleted.

Notify Yandex that the page needs to be deleted

You can do this in several ways:

If a page is removed from the site

- Prevent the page from being indexed using the Disallow directive in your robots.txt file.

- Configure the server so that when the robot requests the page address, it returns the HTTP status code 404 Not Found, 403 Forbidden or 410 Gone.
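Whether a removed page really answers with one of these codes can be checked with a short script. The sketch below is illustrative: the helper names are made up, the set of codes is simply the three listed above, and fetch_status performs a real network request, so it should only be pointed at your own pages.

```python
import urllib.error
import urllib.request

# Status codes named in the text as signals that a page has been removed.
REMOVAL_STATUSES = {403, 404, 410}


def signals_removal(status: int) -> bool:
    """Return True if the status code tells the robot the page is gone."""
    return status in REMOVAL_STATUSES


def fetch_status(url: str) -> int:
    """Return the HTTP status code for url (performs a real network request)."""
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=10) as response:
            return response.status
    except urllib.error.HTTPError as error:
        # urllib raises for 4xx and 5xx responses; the code is on the exception.
        return error.code
```

For example, signals_removal(fetch_status(address)) reports whether a given address of yours already returns a suitable code. Redirects are followed automatically, so the status of the final address is what gets reported.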

If the page should not appear in search

- Prevent the page from being indexed using the Disallow directive in your robots.txt file.

- Prevent pages from being indexed using the noindex meta tag.

If you have configured the server to respond with a 4XX code or used the noindex meta tag for many pages, the robot will learn about the change to each page gradually. In this case, prevent the pages from being indexed using the Disallow directive.

When the Yandex robot visits the site and learns about the ban on indexing, the page will disappear from the search results within a week. After this, the address of the deleted page will appear in the list of excluded pages in the Indexing → Pages in search section of Yandex.Webmaster.

The robot will continue to visit the page for some time to make sure its status has not changed. If the page remains inaccessible, it will disappear from the robot's database.

The page may reappear in search results if you remove the indexing restriction in your robots.txt file or the server response changes to 200 OK.

If a page is removed from a site due to copyright infringement, the order in which it is removed from search results does not change and is not a priority.

Speed up page removal from search

If the robot has not yet visited your site after you blocked the pages from indexing, you can ask Yandex to remove the page using the “Remove pages from search” tool in Yandex.Webmaster.

Remove individual website pages from Yandex search

Add and confirm the site in Yandex.Webmaster.

Make sure your robots.txt file contains a Disallow directive for the pages you want to remove. If the robot finds other directives in robots.txt for the pages specified in Yandex.Webmaster, then, despite them, it will delete the pages from the search.

You can delete a directory, all site pages, or pages with parameters in the URL. To do this, add and confirm the site in Yandex.Webmaster.

In Yandex.Webmaster, go to the Tools → Removing pages from search page.

Make sure your robots.txt file contains a Disallow directive for the pages you want to remove. If the robot finds other directives in robots.txt for the pages specified in Yandex.Webmaster, then, despite them, it will delete the pages from the search.

- Set the switch to By prefix.

- Specify prefix:

You can send up to 20 instructions for one site per day.

- Click the Delete button.
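With a limit of 20 instructions per site per day, a long list of prefixes has to be spread over several days. The following sketch only does the arithmetic of splitting a list into daily batches; the function name and the sample prefixes are hypothetical, and the submission itself still happens by hand in Yandex.Webmaster.

```python
def daily_batches(prefixes, per_day=20):
    """Split prefixes into consecutive groups of at most per_day items."""
    return [prefixes[i:i + per_day] for i in range(0, len(prefixes), per_day)]


if __name__ == "__main__":
    # Hypothetical list of 45 section prefixes to remove.
    prefixes = [f"/catalog/{n}/" for n in range(45)]
    for day, batch in enumerate(daily_batches(prefixes), start=1):
        print(f"Day {day}: {len(batch)} prefixes")
```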

Statuses after sending URL

How to return a page to search results

Remove the directives that prohibit indexing from the robots.txt file, or remove the noindex meta tag. The pages will return to the search results when the robot crawls the site and learns about the changes. This may take up to three weeks.