Computer vision lectures. How computer vision is changing markets

Computer vision is a set of techniques that allow you to train a machine to extract information from an image or video. In order for a computer to find certain objects in images, it must be trained. To do this, a huge training sample is compiled, for example, from photographs, some of which contain the desired object while the rest do not. Next, machine learning comes into play. The computer analyzes the images from the sample, determines which features and their combinations indicate the presence of the desired objects, and calculates their significance.

After completing the training, computer vision can be used in practice. For a computer, an image is a collection of pixels, each of which has its own brightness or color value. In order for the machine to get an idea of the contents of the picture, it is processed using special algorithms. First, potentially significant locations are identified. This can be done in several ways. For example, the original image is subjected to Gaussian blur several times using different blur radii. The results are then compared with each other. This allows you to identify the most contrasting fragments - bright spots and broken lines.
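The blur-and-compare step described above is commonly known as a difference of Gaussians. The following is only a minimal sketch in plain Python, assuming a tiny grayscale image stored as a list of rows; the function names, the two blur radii and the toy image are illustrative choices, not taken from any particular system.

```python
import math

def gaussian_kernel(sigma):
    """1-D Gaussian weights, normalized so that they sum to 1."""
    radius = max(1, int(3 * sigma))
    weights = [math.exp(-(i * i) / (2 * sigma * sigma))
               for i in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]

def blur_1d(row, kernel):
    """Convolve one row with the kernel, clamping indices at the borders."""
    radius = len(kernel) // 2
    n = len(row)
    out = []
    for i in range(n):
        acc = 0.0
        for k, w in enumerate(kernel):
            j = min(max(i + k - radius, 0), n - 1)
            acc += w * row[j]
        out.append(acc)
    return out

def gaussian_blur(image, sigma):
    """Separable blur: first along rows, then along columns."""
    kernel = gaussian_kernel(sigma)
    rows = [blur_1d(r, kernel) for r in image]
    cols = [blur_1d(list(c), kernel) for c in zip(*rows)]
    return [list(r) for r in zip(*cols)]

def difference_of_gaussians(image, sigma_small=1.0, sigma_large=2.0):
    """Subtract a strongly blurred copy from a lightly blurred one."""
    a = gaussian_blur(image, sigma_small)
    b = gaussian_blur(image, sigma_large)
    return [[x - y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]

# Toy 9x9 image: dark background with a single bright pixel in the centre.
image = [[0.0] * 9 for _ in range(9)]
image[4][4] = 1.0

dog = difference_of_gaussians(image)

# The strongest response marks the most contrasting location.
peak = max((abs(v), (y, x))
           for y, row in enumerate(dog)
           for x, v in enumerate(row))[1]
print(peak)
```

On this toy input the strongest response falls on the bright pixel itself, which is the behaviour the paragraph describes: contrasting spots stand out when differently blurred copies are compared.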

Once significant places are found, the computer describes them in numbers. Recording a fragment of a picture in numerical form is called a descriptor. Using descriptors, you can fairly accurately compare image fragments without using the fragments themselves. To speed up calculations, the computer clusters, or distributes descriptors into groups. Similar descriptors from different images fall into the same cluster. After clustering, only the number of the cluster with descriptors most similar to the given one becomes important. The transition from a descriptor to a cluster number is called quantization, and the cluster number itself is called a quantized descriptor. Quantization significantly reduces the amount of data that a computer needs to process.
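Quantization as described here amounts to replacing each descriptor with the number of its nearest cluster. A minimal sketch, assuming the cluster centers have already been computed (for example by k-means); the two-dimensional centers and descriptors below are made-up illustration data, and real descriptors typically have many more dimensions.

```python
def squared_distance(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def quantize(descriptor, centers):
    """Return the index of the cluster center closest to the descriptor."""
    return min(range(len(centers)),
               key=lambda i: squared_distance(descriptor, centers[i]))

# Hypothetical cluster centers and descriptors (illustration only).
centers = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0)]
descriptors = [(1.0, 0.5), (9.0, 1.0), (0.5, 8.0), (11.0, -1.0)]

# Each descriptor is reduced to a single integer: its cluster number.
words = [quantize(d, centers) for d in descriptors]
print(words)  # [0, 1, 2, 1]
```

Four vectors are reduced to four small integers, which is where the saving in data volume comes from.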

Based on quantized descriptors, the computer can compare images and recognize objects in them. It compares sets of quantized descriptors from different images and infers how similar they or their individual fragments are. Search engines also use this comparison to search by an uploaded image.
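One common way to compare sets of quantized descriptors is to count how often each cluster number occurs in an image and compare the resulting histograms. The sketch below assumes this "bag of visual words" approach with cosine similarity; the text does not say which measure search engines actually use, and the cluster numbers are invented for illustration.

```python
import math
from collections import Counter

def word_histogram(words, vocabulary_size):
    """Count how many descriptors fell into each cluster."""
    counts = Counter(words)
    return [counts.get(i, 0) for i in range(vocabulary_size)]

def cosine_similarity(h1, h2):
    """1.0 for identical directions, 0.0 when no cluster is shared."""
    dot = sum(a * b for a, b in zip(h1, h2))
    norm = math.sqrt(sum(a * a for a in h1)) * math.sqrt(sum(b * b for b in h2))
    return dot / norm if norm else 0.0

# Hypothetical quantized descriptors of three images (vocabulary of 5 clusters).
image_a = [0, 1, 1, 2, 2, 2]
image_b = [0, 1, 2, 2, 2, 2]
image_c = [3, 3, 4, 4, 4, 3]

hist_a = word_histogram(image_a, 5)
hist_b = word_histogram(image_b, 5)
hist_c = word_histogram(image_c, 5)

sim_ab = cosine_similarity(hist_a, hist_b)  # similar content, high score
sim_ac = cosine_similarity(hist_a, hist_c)  # no shared clusters, zero score
print(round(sim_ab, 3), sim_ac)
```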

Facial recognition in Russia

Where and why do they want to use it?

Mass events

NtechLab has developed a camera system that... It recognizes violators and sends their photographs to the police. The police will also have hand-held cameras to photograph suspicious people, recognize their faces and find out from databases who they are.

Cameras with facial recognition are being tested in the Moscow metro. They scan the faces of 20 people per second and check them against databases of wanted people. If there is a match, the cameras send data to the police. For 2.5 months the system was wanted. It is known that such cameras exist, but perhaps they were installed at other stations.

Otkritie Bank launched a facial recognition system at the beginning of 2017. It compares the visitor's face with a photo in the database. The system is needed to serve customers faster, but how exactly is not specified. In the future, Otkritie wants to use the system for remote identification. A similar system, but developed by Rostelecom, should appear in 2018.

The main thing is the algorithm

What technology allows machines to recognize faces

Sergey Milyaev

Computer vision is algorithms that extract high-level information from images and videos, thereby automating some aspects of human visual perception. Computer vision for a machine, just like normal vision for a person, is a means of measuring and obtaining semantic information about the observed scene. With its help, the machine receives information about the size of the object, what shape it is and what it is.

Camera with OpenCV computer vision algorithm monitors children on the playground

Everything works based on neural networks

How exactly does facial recognition work, with an example

Sergey Milyaev: Machines do this most efficiently based on machine learning, that is, when they make a decision based on some parametric model without all the necessary decision rules being explicitly described in program code. For example, for face recognition, a neural network extracts features from an image and obtains a unique representation of each person’s face, which is not affected by the orientation of the head in space, the presence or absence of a beard or makeup, lighting, age-related changes, and so on.

Computer vision does not reproduce the human visual system, but only simulates certain aspects to solve various problems

Sergey Milyaev

Leading researcher at VisionLabs

The most common computer vision algorithms today are based on neural networks, which, with the increase in processor performance and data volume, have demonstrated high potential for solving a wide range of problems. Each fragment of the image is analyzed using filters with parameters that the neural network uses to search for characteristic features of the image.

Example

The layers of the neural network sequentially process the image, with each subsequent layer calculating more and more abstract features, and the filters on the last layers can see the entire image. When recognizing faces in the first layers, the neural network determines simple features like boundaries and facial features, then in deeper layers filters can identify more complex features - for example, two circles next to each other will most likely mean that these are eyes, and so on.
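To make the idea of a filter on the first layers concrete, here is a minimal sketch of applying one small filter to an image. In a trained network the filter values are learned from data; the Sobel-like values below are set by hand purely for illustration, and the tiny image is made up.

```python
def apply_filter(image, kernel):
    """Slide the kernel over the image (no padding) and return the response map."""
    kh, kw = len(kernel), len(kernel[0])
    out = []
    for y in range(len(image) - kh + 1):
        row = []
        for x in range(len(image[0]) - kw + 1):
            row.append(sum(kernel[i][j] * image[y + i][x + j]
                           for i in range(kh) for j in range(kw)))
        out.append(row)
    return out

# Hand-written filter that responds to dark-to-bright transitions
# from left to right (an assumption; real filters are learned).
vertical_edge = [[-1, 0, 1],
                 [-2, 0, 2],
                 [-1, 0, 1]]

# 5x6 image: dark left half, bright right half.
image = [[0, 0, 0, 1, 1, 1] for _ in range(5)]

response = apply_filter(image, vertical_edge)
for row in response:
    print(row)  # [0, 4, 4, 0] for every row
```

The response is zero over uniform regions and large only where the boundary lies. Deeper layers combine many such response maps into more abstract features.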

OpenCV computer vision algorithm determines how many fingers are shown to it

The computer knows when it's being lied to

Can a person fool a very smart computer, three examples

Oleg Grinchuk

Lead Researcher at VisionLabs

Fraudsters may try to either impersonate another person in order to gain access to their accounts and data, or trick the system so that it cannot recognize them in the first place. Let's consider both options.

Photo, video of another person, or printed mask

The VisionLabs platform combats these methods of deception by checking for liveness, that is, it checks that the object in front of the camera is alive. This could be, for example, interactive liveness, when the system asks a person to smile, blink, or bring the camera or smartphone closer to their face.

The set of checks is impossible to predict, since the platform creates a random sequence with tens of thousands of combinations - it is unrealistic to record thousands of videos with the desired combinations of smiles and other emotions. And if the camera is equipped with near-infrared sensors or a depth sensor, then they transmit to the system additional information, which helps to determine from one frame whether the person in front of it is real.
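The arithmetic behind "tens of thousands of combinations" can be illustrated with a small sketch. It is not the VisionLabs implementation: the list of actions (beyond smiling, blinking and moving closer, which are mentioned above) and the sequence length of five are assumptions made only for this example.

```python
import math
import random

# Hypothetical set of actions the system could ask for.
ACTIONS = ["smile", "blink", "turn left", "turn right", "nod",
           "raise eyebrows", "open mouth", "move closer", "move away", "look up"]

def random_challenge(length=5, rng=random):
    """Pick `length` distinct actions in random order."""
    return rng.sample(ACTIONS, length)

# Number of ordered sequences of 5 distinct actions out of 10.
total_sequences = math.perm(len(ACTIONS), 5)
print(total_sequences)  # 30240

challenge = random_challenge()
print(challenge)
```

Even with only ten actions, an attacker would have to pre-record tens of thousands of videos to cover every possible sequence.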

In addition, the system analyzes the reflection of light from different textures, as well as the environment of the object. So it is almost impossible to deceive the system in this way.

In this case, in order for the fraudster to reproduce a copy sufficient to gain access, he needs to have access to the source code and, based on the system's reactions to changes in appearance with makeup, gradually change it to become an exact copy of the other person.

The attacker needs to hack precisely the logic and principle of verification. But for a third-party user, it’s just a camera, a black box, looking at which it is impossible to understand what kind of verification option is inside. Moreover, the factors for checking differ from case to case, so you cannot use any universal algorithm for hacking.

If there are several recognition errors, the system sends a warning signal to the server, after which the attacker’s access is blocked. So even in the unlikely event of having access to the code, it is difficult to hack the system, since the attacker cannot endlessly change his appearance until recognition occurs.

Large sunglasses, cap, scarf, cover your face with your hand

The system will not be able to recognize a person if most of his face is hidden, even though the neural network recognizes faces much better than a person. But in order to completely hide from the facial recognition system, a person must always cover his face from the cameras, and this is quite difficult to implement in practice.

Computer vision is superior to human vision

What exactly and why, with an example

Yuri Minkin

Computer vision systems are similar in basic operating principles to human vision. Like humans, they have a device that collects information (a video camera, the analogue of the eyes) and a device that processes it (a computer, the analogue of the brain). But computer vision has a significant advantage over human vision.

A person has a certain threshold for what they can see and what information they can extract from an image. This threshold cannot be exceeded purely for physiological reasons. And computer vision algorithms will only improve. They have limitless possibilities for training

Yuri Minkin

Head of Cognitive Technologies Department

A good example is computer vision technology in self-driving cars. If one person can teach his knowledge about the road situation to only a small, significantly limited number of people, then machines can immediately transfer all existing experience in detecting certain objects to all new systems that will be installed on a fleet of thousands or even millions of cars.

Example

At the end of last year, Cognitive Technologies specialists conducted experiments comparing human capabilities and artificial intelligence in problems of detecting objects in a road scene. And now, in some cases, AI is not only not inferior, but even superior to human capabilities. For example, it was better at recognizing road signs when they were partially obscured by tree foliage.

Computers are used in court

Can a computer testify against a person?

Sergey Izrailit: Currently, the law specifically regulates the use of data “obtained from computers” for use as evidence of some significant circumstances, including offenses, only for certain cases. For example, the use of cameras that recognize license plates of cars that violate the speed limit is regulated.

In general, such data can be used along with any other evidence that the investigation or court can either take into account or reject. At the same time, procedural legislation establishes a general procedure for working with evidence - an examination, within the framework of which it is established whether the presented record really confirms some facts or whether the information was distorted in one way or another.

Computer vision and image recognition are an integral part of artificial intelligence (AI), which has gained immense popularity over the years. In January of this year, the CES 2017 exhibition took place, where you could look at the latest achievements in this area. Here are some interesting examples of the use of computer vision that could be seen at the exhibition.

8 examples of using computer vision

Veronica Elkina

1. Self-driving cars

The largest stands with computer vision belong to the automotive industry. After all, self-driving and semi-autonomous car technologies work largely because of computer vision.

Products from NVIDIA, which has already made big strides in the field of deep learning, are used in many self-driving cars. For example, the NVIDIA Drive PX 2 supercomputer already serves as the underlying platform for self-driving cars from Volvo, Audi, BMW and Mercedes-Benz.

NVIDIA's DriveNet artificial perception technology is self-learning computer vision powered by neural networks. With its help, lidars, radars, cameras and ultrasonic sensors are able to recognize the environment, road markings, vehicles and much more.

3. Interfaces

Eye tracking technology using computer vision is used not only in gaming laptops, but also in desktop and enterprise computers so that they can be controlled by people who cannot use their hands. The Tobii Dynavox PCEye Mini is a ballpoint pen-sized device that makes the perfect discreet accessory for tablets and laptops. This eye tracking technology is also used in new gaming and regular Asus laptops and Huawei smartphones.

Meanwhile, gesture control (computer vision technology that can recognize specific hand movements) continues to develop. It will be used in future BMW and Volkswagen vehicles.

The new HoloActive Touch interface allows users to control virtual 3D screens and press buttons in space. We can say that it is a simple version of the real Iron Man holographic interface (it even reacts in the same way with a slight vibration when pressing elements). Technologies like ManoMotion will make it easy to add gesture controls to almost any device. Moreover, to gain control over a virtual 3D object using gestures, ManoMotion uses a regular 2D camera, so you don’t need any additional equipment.

eyeSight's Singlecue Gen 2 device uses computer vision (gesture recognition, facial analysis, action detection) and allows you to control TVs, smart lighting systems and refrigerators using gestures.

Hayo

Crowdfunding project Hayo is perhaps the most interesting new interface. This technology allows you to create virtual controls throughout your home - by simply raising or lowering your hand, you can increase or decrease the volume of your music, or turn on the kitchen lights by waving your hand over the countertop. It all works thanks to a cylindrical device that uses computer vision, as well as a built-in camera and 3D, infrared and motion sensors.

4. Household appliances

Expensive cameras that show you what's inside your refrigerator don't seem so revolutionary anymore. But what about an app that analyzes images from your refrigerator's built-in camera and tells you when you're low on certain foods?

Smarter's sleek FridgeCam device attaches to the side of your refrigerator and can detect expiration dates, tell you what's in the fridge, and even recommend recipes for selected foods. The device is sold at an unexpectedly affordable price: only $100.

5. Digital signage

Computer vision could change the way banners and advertisements look in stores, museums, stadiums and amusement parks.

A demo version of the technology for projecting images onto flags was presented at the Panasonic stand. Using infrared markers invisible to the human eye and video stabilization, this technology can project advertising onto hanging banners and even flags fluttering in the wind. Moreover, the image will look as if it were actually printed on them.

6. Smartphones and augmented reality

Many have talked about the game as the first mainstream augmented reality (AR) app. However, like other apps trying to jump on the AR train, this game relied more on GPS and triangulation to give users the feeling that the object was right in front of them. Typically, smartphones have little to no real computer vision technology.

However, in November, Lenovo released Phab2, the first smartphone to support Google Tango technology. The technology is a combination of sensors and computer vision software that can recognize images, videos and the world around them in real time through a camera lens.

At CES, Asus debuted the ZenFone AR, a smartphone that supports Tango and Google's Daydream VR. Not only can the smartphone track motion, analyze the environment and accurately determine position, but it also uses the Qualcomm Snapdragon 821 processor, which makes it possible to distribute the computer vision workload. All this helps to use real augmented reality technologies that actually analyze the scene through the smartphone camera.

Later this year, the Changhong H2 will be released, the first smartphone with a built-in molecular scanner. It collects light that bounces off an object and splits it into a spectrum, and then analyzes its chemical composition. Thanks to software, using computer vision, the information obtained can be used for various purposes - from prescribing medications and counting calories to determining the condition of the skin and calculating the level of nutrition.

How to teach a computer to understand what is shown in a picture or photograph? It seems simple to us, but to a computer it is just a matrix of zeros and ones from which you need to extract important information.

What is computer vision? This is the computer's ability to "see"

Vision is an important source of information for humans; with its help we receive, according to various sources, from 70 to 90% of all information. And, naturally, if we want to create a smart machine, we need to implement the same skills in a computer.

The computer vision problem can be formulated rather vaguely. What is “seeing”? It is understanding what is located where just by looking. This is the difference between computer vision and human vision. Vision for us is a source of knowledge about the world, as well as a source of metric information - that is, the ability to understand distances and sizes.

Semantic core of the image

Looking at an image, we can characterize it according to a number of characteristics, so to speak, extract semantic information.

For example, looking at this photo, we can tell that it is outdoors. That this is a city, street traffic. That there are cars here. Based on the configuration of the building and the hieroglyphs, we can guess that this is Southeast Asia. From the portrait of Mao Zedong we understand that this is Beijing, and if anyone has seen the video broadcasts or visited there themselves, they can guess that this is the famous Tiananmen Square.

What else can we say about the picture by looking at it? We can select objects in the image, say, there are people there, there is a fence closer here. Here are the umbrellas, here is the building, here are the posters. These are examples of classes of very important objects that are currently being searched for.

We can also extract some features or attributes of objects. For example, here we can determine that this is not a portrait of some ordinary Chinese person, but specifically of Mao Zedong.

You can determine from a car that it is a moving object, and it is rigid, that is, it does not deform during movement. We can say about flags that these are objects, they also move, but they are not rigid, they are constantly deformed. There is also wind in the scene, this can be determined by the fluttering flag, and you can even determine the direction of the wind, for example, it blows from left to right.

The meaning of distances and lengths in computer vision

Metric information is very important in the science of computer vision. These are all kinds of distances. For example, for a Mars rover this is especially important, because commands from Earth take about 20 minutes and the response takes about 20 minutes. Accordingly, the round trip connection is 40 minutes. And if we draw up a movement plan according to the Earth’s commands, then we need to take this into account.
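The delay figures above follow from the speed of light. A small sketch of the arithmetic, assuming an Earth-Mars distance of 360 million km (near the far end of the range, chosen here to match the 20-minute figure) and a hypothetical rover speed of 0.04 m/s:

```python
SPEED_OF_LIGHT_KM_S = 299_792.458

def one_way_delay_minutes(distance_km):
    """Time a radio signal needs to cover the distance, in minutes."""
    return distance_km / SPEED_OF_LIGHT_KM_S / 60

def distance_travelled_blind(speed_m_s, one_way_minutes):
    """Metres driven before a reaction from Earth can arrive (round trip)."""
    round_trip_s = 2 * one_way_minutes * 60
    return speed_m_s * round_trip_s

# Assumed distance and rover speed (illustrative values).
one_way = one_way_delay_minutes(360_000_000)
blind_metres = distance_travelled_blind(0.04, one_way)

print(round(one_way, 1))       # about 20 minutes one way
print(round(blind_metres, 1))  # about 96 metres per round trip
```

This is why the rover has to estimate distances to obstacles on its own rather than wait for an operator.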

Computer vision technologies have been successfully integrated into video games. From videos you can build three-dimensional models of objects and people, and from user photographs you can reconstruct three-dimensional models of cities. And then walk through them.

Computer vision is a fairly broad field. It is closely intertwined with various other sciences. Computer vision partly overlaps with neighboring fields, and sometimes those fields are treated as parts of computer vision; historically it has happened this way.

Analysis, pattern recognition - the path to creating a higher mind

Let's look at these concepts separately.

Image processing is a field of algorithms in which both the input and the output are images; we transform the picture in some way.

Image analysis is a field of computer vision that focuses on working with a two-dimensional image and drawing conclusions from it.

Pattern recognition is an abstract mathematical discipline that recognizes data in the form of vectors. That is, the input is a vector and we need to do something with it. Where this vector comes from is not so important for us to know.

Computer vision was originally about reconstructing structure from two-dimensional images. Now this area has become wider and can be generally interpreted as making decisions about physical objects based on an image. That is, artificial intelligence.

In parallel with computer vision, in a completely different area, in geodesy, photogrammetry developed - this is the measurement of distances between objects using two-dimensional images.

Robots can "see"

And the last thing is machine vision. Machine vision refers to the vision of robots, that is, solving production problems. We can say that computer vision is one big science that partially combines several other sciences. And when computer vision receives a specific application, it turns into machine vision.

The field of computer vision has many practical applications. It is related to production automation. In enterprises, it is becoming more efficient to replace manual labor with machine labor. The machine does not get tired, does not sleep, has an irregular work schedule, and is ready to work 365 days a year. This means that using machine labor, we can get a guaranteed result at a certain time, and this is quite interesting. All tasks for computer vision systems have visual applications. And there is nothing better than seeing the result immediately from the picture, only at the calculation stage.

On the threshold to the world of artificial intelligence

Plus the area is difficult! A significant part of the brain is responsible for vision, and it is believed that teaching a computer to “see”, that is, to fully solve computer vision, is one of the AI-complete problems. If we can solve this problem at the human level, we will likely solve the problem of AI at the same time. Which is very good! Or not very good if you watch Terminator 2.

Why is vision difficult? Because the image of the same objects can vary greatly depending on external factors. Objects look different depending on the observation points.

For example, the same figure taken from different angles. And what’s most interesting is that the figure can have one eye, two eyes, or one and a half. And depending on the context (if this is a photo of a person in a T-shirt with drawn eyes), then there may be more than two eyes.

The computer doesn’t understand yet, but it already “sees”

Another factor that creates difficulties is lighting. The same scene with different lighting will look different. The size of objects may vary. Moreover, objects of any classes. Well, how can you say about a person that he is 2 meters tall? No way. A person’s height can be 2.3 m or 80 cm. Like objects of other types, nevertheless, these are objects of the same class.

Especially living objects undergo a wide variety of deformations. Hair of people, athletes, animals. Look at pictures of running horses; it is simply impossible to determine what is happening to their mane and tail. What about the overlap of objects in the image? If you slip such a picture into a computer, even the most powerful machine will find it difficult to come up with the correct solution.

The next type is camouflage. Some objects and animals disguise themselves as their environment, and quite skillfully. And the spots and colors are the same. But nevertheless, we see them, although not always from afar.

Another problem is movement. Objects in motion undergo unimaginable deformations.

Many objects are very changeable. For example, in the two photos below there are objects like “chair”.

And you can sit on this. But teaching a machine that such different things in shape, color, and material are all the object “chair” is very difficult. This is the task. Integrating computer vision methods means teaching a machine to understand, analyze, and guess.

Integration of computer vision into various platforms

Computer vision began to reach the mass market back in 2001, when the first face detectors were created. This was done by two authors, Viola and Jones. Theirs was the first fast and fairly reliable algorithm that demonstrated the power of machine learning methods.
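Part of what made the Viola-Jones detector fast is the integral image, which lets the sum of any rectangle of pixels be read off with four lookups; simple "rectangle" features are then differences of such sums. The sketch below shows only this building block on a made-up 4x4 image, not the full detector (which also involves boosting and a cascade of classifiers).

```python
def integral_image(image):
    """ii[y][x] holds the sum of all pixels above and to the left of (y, x)."""
    h, w = len(image), len(image[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        for x in range(w):
            ii[y + 1][x + 1] = (image[y][x] + ii[y][x + 1]
                                + ii[y + 1][x] - ii[y][x])
    return ii

def rect_sum(ii, top, left, height, width):
    """Sum of a rectangle using four lookups, regardless of its size."""
    bottom, right = top + height, left + width
    return ii[bottom][right] - ii[top][right] - ii[bottom][left] + ii[top][left]

def two_rect_feature(ii, top, left, height, width):
    """Left half minus right half: a simple Haar-like feature."""
    half = width // 2
    return (rect_sum(ii, top, left, height, half)
            - rect_sum(ii, top, left + half, height, half))

# Made-up 4x4 grayscale image.
image = [[1, 2, 3, 4],
         [5, 6, 7, 8],
         [9, 10, 11, 12],
         [13, 14, 15, 16]]

ii = integral_image(image)
print(rect_sum(ii, 1, 1, 2, 2))          # 6 + 7 + 10 + 11 = 34
print(two_rect_feature(ii, 0, 0, 4, 4))  # 60 - 76 = -16
```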

Now computer vision has a fairly new practical application - human face recognition.

But recognizing a person the way it is shown in films - from arbitrary angles and under different lighting conditions - is impossible. What can be solved with a high degree of confidence is the task of deciding whether two photographs show the same person or different people when the lighting differs or the pose is close to that of a passport photo.

The requirements for passport photographs are largely due to the peculiarities of facial recognition algorithms.

For example, if you have a biometric passport, then at some modern airports you can use an automatic passport control system.

computer vision is the ability to recognize arbitrary text

Perhaps you have used a text recognition system. One of these is FineReader, a very popular system on the RuNet. Forms that have to be filled in scan perfectly, and the system recognizes the information on them very well. But with arbitrary text in an image, the situation is much worse. This task still remains unsolved.

Games involving computer vision, motion capture

A separate large area is the creation of three-dimensional models and motion capture (which is quite successfully implemented in computer games). The first program that uses computer vision is a system for interacting with a computer using gestures. During its creation, a lot of things were discovered.

The algorithm itself is quite simple, but to set it up it was necessary to create a generator of artificial images of people in order to get a million pictures. With their help, the supercomputer selected the parameters of the algorithm according to which it now works best.

That’s how a million images and a week of supercomputer calculation time made it possible to create an algorithm that consumes 12% of the power of one processor and perceives a person’s pose in real time. This system is Microsoft Kinect (2010).

Searching for images by content allows you to upload a photo into the system, and based on the results, it will display all images with the same content and taken from the same angle.

Examples of computer vision: 3D and 2D maps are now made with its help. Maps for car navigation systems are regularly updated based on data from dashcams.

There is a database with billions of geotagged photos. By loading a photo into this database, you can determine where it was taken and even from what angle. Naturally, provided that the place is popular enough that tourists visited it at one time and took a number of photographs of the area.

Robots are everywhere

Robotics is everywhere these days, you can’t live without it. Now there are cars that have special cameras that recognize pedestrians and road signs in order to pass warnings to the driver (this is, in a sense, a computer vision program that assists the driver). And there are fully automated robot cars, but they can't rely on a camera system alone without using a lot of additional information.

A modern camera is an analogue of a pinhole camera.

Let's talk about digital images. Modern digital cameras are designed on the principle of a pinhole camera. Only instead of a hole through which a beam of light penetrates and projects the outline of an object on the back wall of the camera, we have a special optical system called a lens. Its task is to collect a large beam of light and transform it in such a way that all rays pass through one virtual point in order to obtain a projection and form an image on a film or matrix.

The sensor (matrix) of a modern digital camera consists of individual elements - pixels. Each pixel measures the total energy of the light that falls on it and outputs one number. Therefore, in a digital camera, instead of a continuous image, we receive a set of measurements of the brightness of the light falling on each individual pixel. That is why, when we enlarge the image, we see not smooth lines and clear contours, but a grid of squares - pixels - colored in different tones.
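The "grid of squares" effect is easy to reproduce. A minimal sketch, assuming a grayscale image stored as a list of rows of brightness values and the simplest enlargement method (nearest neighbour), in which every measurement is just repeated:

```python
def enlarge(image, factor):
    """Nearest-neighbour upscaling: each pixel becomes a factor x factor square."""
    out = []
    for row in image:
        wide = [value for value in row for _ in range(factor)]
        out.extend(list(wide) for _ in range(factor))
    return out

# A 2x2 "photo": four brightness measurements (illustrative values).
image = [[0, 255],
         [128, 64]]

big = enlarge(image, 2)
for row in big:
    print(row)
# [0, 0, 255, 255]
# [0, 0, 255, 255]
# [128, 128, 64, 64]
# [128, 128, 64, 64]
```

No new information appears when the image is enlarged; the same four numbers simply cover more screen area, so the individual squares become visible.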

Below you can see the world's first digital image.

But what's missing from this image? Color. What is color?

Psychological perception of color

Color is what we see. The color of the same object will be different for a person and a cat, since humans and animals have different visual systems. Therefore, color is a psychological property of our vision that arises when observing objects and light, and not a physical property of the object and light. Color is the result of the interaction between the components of light, the scene, and our visual system.

Programming Computer Vision in Python Using Libraries

If you decide to seriously study computer vision, you should be prepared for a number of difficulties; this science is not the easiest and hides a number of pitfalls. But Programming Computer Vision with Python by Jan Erik Solem is a book that puts everything into the simplest possible language. Here you will become familiar with methods for recognizing various objects in 3D, learn how to work with stereo images, virtual reality and many other computer vision applications. The book contains plenty of examples in Python. But the explanations are presented, so to speak, in a generalized manner, so as not to overload the reader with overly scientific and heavy information. The work is suitable for students, amateurs and enthusiasts. You can download this book and others about computer vision (pdf format) online.

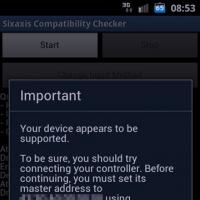

There is an open source library of computer vision, image processing and numerical algorithms called OpenCV. It can be used from many modern programming languages, including Python; it is under constant development and has a large community.

Microsoft provides API services that can train neural networks to work specifically with facial images. They can also be used with Python as the programming language.